Native Advertising: Ad Agencies Dip Their Little Toes In The Deep End

Native Advertising as popularly defined (pick one) is nowhere near "the big idea", and further underscores a dark truth concerning the fate of every ad agency in the business.

As is often the case in the one-upsman world of advertising, Native's definition is still in the land-grab phase. But in short:

In other words, theoretically without employing traditional interruptive tactics, advertisers would deliver brand messages in the form of - gasp - honest to goodness desirable content, products or services that users might be willing to seek out and pay money for, except that it's probably free.

In yet other words the same old ham-fisted, ad industry bozos are trying (still) to clod their way through yet another little bit of age-old interactive media obviousness as though it's some big new idea.

In truth, the underlying observations that have inspired today's "Native Advertising" breathlessness have been openly in place for over 15 years.

And while there is clearly valid intent embedded in the notion of a kind of "native" solution, the current native advertising definitions are all somewhat on the incomplete side.

Why should this trickling acceptance of reality have taken a young voter's entire life span?

I strongly believe it's because, by their very design, ad agencies are built, trained and honed to do one thing well: interrupt the consumer experience with a message of value that is itself just valuable enough to keep viewers from looking away.

The entire 100+ Billion dollar industry. Staffed, funded, and optimized. That's what they do.

And that singular capability is entirely misaligned with the very fundamental principles of interactive media. The future of media.

Think about that - Ad agencies are the wrong tool for the future.

It's just a whole lot easier to sneak an ad in front of a captive audience, an ad that is just good enough while it sufficiently delivers its brand message that people don't get up and leave, than it is to create something so valuable and magnetic that a regular person will seek out, be willing to pay for, and enjoy it.

Not surprisingly, this truth doesn't get talked about much in ad circles.

I know, I've heard it, "good advertising IS valuable", "Lots of people watch the Super Bowl for the amazing spots", "People in the UK go to the theater early to watch the commercials", and "My wife buys fashion magazines for the ads."

Memes that keep an industry of frustrated creatives from feeling the need to get into real content industries.

In reality, lots of people watch the Super Bowl (real content), so advertisers spend way more money on those ads which invariably suck less - but those same viewers would be just fine watching the game without interruption. People in the UK are just as annoyed as people in the US when they pay for a movie (real content), show up on time and are stuck watching 20 minutes of commercials. And your wife would be quite pleased if the magazine provided more fashion review and commentary (again, real content) in place of those ads.

At this point in the conversation my advertising friends point at Old Spice Man.

Jesus. Yes, there is a type of freakery along every skew of humanity, ads that become eagerly shared being one of the very rarest. Every 6-7 years there is one Old Spice Man. That is not a repeatable, sustainable solution. A meaningless blip on a radar that is otherwise teeming with actual useful data that is being openly ignored.

Don't you wonder why there are so few wildly successful ads in the interactive space? Don't you ever wonder why? I mean these aren't just random people making YouTube cat videos. These are paid professionals who are theoretically masters of their art form. Why then is advertising in interactive not more obviously successful and coveted?

Periodically advertisers try to acknowledge this disconnect and do tiptoe into the deep end with what seem like penetrating PowerPoint decks that try to sound all hard, hip and anarchic, generally stating that today's busy, connected consumers are just uninterested in brands and ad messages altogether. And I guess this must feel like a cathartic, even maverick, stab at the truth. But these are ultimately impotent decks, never going all the way. Always falling short of any real disruption. Never willing to upturn their own boat to reveal the utter brokenness of their paycheck. These exercises (and all ad agencies toy with presentations like these) end the same way, with some softball, vaguely nuanced adjustment to the old ad models.

Because those few that do look critically, all the way under the rug with open eyes, see a slightly horrific slippery slope that ends with upheaval. The implication that the industry is no longer built on solid ground. That the very ad agency infrastructure is literally not aligned on the foundation of the future.

That creative directors, art directors, copywriters and producers - are the wrong people. The wrong people to invent the solutions - helping companies evangelize their offerings into interactive media and extend awareness through the social spaces of the future. (planners do have a role however, more on that later)

From where I sit, all this agency hyperventilating over the virtues and potential of "Native Advertising" is little more than the dozy ass-scratch of a sated, comfortable industry that hasn't yet felt the crunch of the iceberg needed to rouse it from its operational hammock-basking.

Why bother? When we can rely on the apparent solid ground of past innovations?

Yes - there are a lot of hard working creative people in advertising - but they are generally working below this line. They are working within the Matrix, below pointed, self-critical analysis and reinvention of the industry's very models and structure. Its reason for being.

The industry chose the blue pill.

A Reboot is Needed

In software, developers of big systems spend a relatively long time nursing legacy code, modifying and amending it to adapt to a changing world. But there comes a point where it becomes unwieldy and inefficient, where the originating code base is no longer relevant, where its developers have to step back and ask "if we were building an ideal system from scratch today, would this be it?" When the answer stops being "possibly", then the legacy design usually gets retired.

The same must be asked of legacy business processes.

Clients and agencies need to ask the same question of the existing agency business and infrastructure. Big gains will come from reinventing it, rebuilding it directly on the back of solid interactive principles.

This requires a reboot.

Following such a reboot a lot of good ad people will necessarily have to redirect their careers. And other new skill sets will suddenly be in high demand.

"Whoa, whoa whoa," you say, "Good lord man, you're wrong in this, surely. If all this were really true it would have been revealed before now. It would have been obvious. Clients wouldn't keep paying for interruptive ads. It never could have gone on this long.

"In fact by sheer virtue that clients keep paying to have the same agency conduits create and deploy traditional, interruptive models in interactive media - that must prove that it's still valid, right?"

No I don't believe that. The present economics, while very real today, create the convincing illusion that the industry must be right-configured. That it must be aligned with interactive media and therefore, the future of media. But this belief is little more than another kind of bubble. A bubble that was indeed solid at one time. Back when the Men were Mad. Except that today, the center has leaked out.

"But anyway," you assert, "you're missing the main point - lots of the ads do work for the most part, we get conversions! Definitive proof that everything is solid."

For now perhaps, and to a point. So what will pop the bubble? Mere discovery of the "new best" - a true native model. That's how tenuous this is.

At Lego they have a corporate mantra: "only the best is good enough". We all aspire to that in many things. The implication of that line, though, is that there must be something else, something other than the "best", that is considered by most others to be "good enough".

And today clients are willing to pay for our current best, which is good enough it seems to do the trick, while convincing us we're on the right track. But I strongly assert, it's not the best. There is a best that has been sitting in the wings (for 15 years!). Clients and consumers don't seem to know this best is an option, I assume because they haven't seen it yet.

Steve Jobs famously commented on innovating new solutions that "…people often don't know what they want until you show it to them." And so it goes here too.

Understanding "Native" - a New Best

To find a new best, we need to align ourselves firmly on the backbone of interactive media. So we need to know what interactive media really is.

That awful definition of Native Advertising at the top of this page (courtesy of Wikipedia - the expression of our collective psychosis) illustrates a pathetic lack of understanding.

"...a web advertising method..."

Web advertising? Really?

Is that the "medium" you ad guys are working in? The World Wide Web? Ok, so what do you call it when the user is offline, not in a browser, using an app? Or some new unknowable device? Does the ad method just stop working there? C'mon, you're thinking too small.

To find what's right, you have to ask yourself "what functionally defines this medium landscape?" What one feature is consistent across all states of the medium: the web on PC, the web on mobile, apps, socializing on various platforms, both connected and offline, etc.? And what attribute differentiates the medium from all other mediums?

The main point of difference and the consistent theme across all states is that the user is in control.

User control is the primary function afforded by the computer. That is what the medium is. It is the medium of users. Usership is what we mean by "interactive".

Connectivity is merely the distribution of that control.

And we can't gloss over this: it's the user that is in control.

Not the content creators, certainly not the advertiser. No. Rather, content creators are just servants.

And that's why advertisers, beholden for all time to interruption, flounder.

So fundamental is that largely unspoken truth, that the user should be in control, that every single time a user is annoyed with an interactive experience, it can be directly attributed to a breakdown in compliance with this one paradigm. Every - time. Every time a content creator attempts to assert his intent, his goals upon the user - the user recoils with recognition that something feels very wrong.

For well over a decade and to anyone who would listen, I have called this paradigm The Grand Interactive Order. It's the first axiom of interactive. Really, it's old - but worth a read, I think.

The second axiom I call the Interactive Trade Agreement.

This describes how sufficient value is necessary for any interaction in the medium to transpire. Sound familiar?

It's another very old idea that nevertheless seemed lost on most advertisers for years - except that they now talk about Native Advertising, which is directly rooted in compliance with this axiom.

The age of reliance on a captive audience is falling behind us. We can no longer merely communicate the value of clients and products; today our messages must themselves be valuable. Be good enough that they will be sought out. Today ads must have independent value - in addition to a marketing message. Because for the first time consumers have to choose our "ads" over other content.

This quote above was not part of a 2013 Native Advertising deck. Though it might as well have been. It was actually a thread from Red Sky Interactive's pitch deck made to a dozen Fortune 500 firms between 1996 and 1999. It was philosophically part of Red Sky's DNA.

In the 90s these ideas largely fell on deaf ears. It sounded good, but it scared too many people. People who were still trying to wrap their heads around click-throughs and that viral thing.

Indeed, Native Advertising is just the ad industry re-discovering these basic ideas, once again, 15 years later.

Perhaps you can see why I feel no pity as I contemplate the big ad agencies falling by the wayside. They have had so much time and resource to adapt - had they only bothered to develop a strong understanding of the medium.

Maybe they can still pull out of their disconnected nose-dive.

In the spirit of willingness to beat my head against a wall until they do, I will offer something more than criticism.

Native Marketing

First - we need to drop this Native "Advertising" thing. As I have argued - advertising is about interruption by design - and that's patently inauthentic.

However, advertising's larger parent, "Marketing" does make sense. Ultimately what we want to do is find an iconic term that will help us stay on target, and marketing in my mind is much more integrated into the process of conducting business than advertising is. Native "Business" might be an even truer expression, but for now let's sit in the middle with "Marketing".

Next, the people. The people working in advertising today are, by and large, just not trained in the disciplines that true native marketing demands.

Planners cross over, however. Planners must still do market research in the future, study behavior and psychographics and develop a strategic insight - an insight that informs the new creative teams.

To wit, gone are teams made up of Creative Directors and Art Directors and Copywriters. That's about communication of value. They'll still exist somewhere but they'll play a small service role.

True native solutions require the skills and sensibilities of the people who are experienced in creating businesses, content and products which - without the benefit of pre-aggregated viewers/users - people will pay for. These are Silicon Valley entrepreneurs, filmmakers, product designers, etc. These are the creative teams of the agency of the future, and they take the lead in development.

These teams must understand the client's business. Not just at its surface - but thoroughly - every detail of sourcing, production, manufacturing processes and fulfillment. It's the only way a truly native solution can be conceived. Because remember - this is not about creating a communication of value, the new goal is to create value.

We are further not just creating value at random. We are creating value to help grow a client's business, so the value we create must interlock into the client's business. To be authentic. To honor the axioms.

So our agency of the future would know enough about the company that realistic implications and cost of operation and fulfillment of any proposal will have been considered.

As such, the agency will supply a business plan - as part of their proposal.

Example - Cool Shoes

Let me put myself out there for criticism.

Below is an example of what I think qualifies as a truly native marketing solution.

Each part of the system I'm going to describe has been done. But never together as a singular execution, and never under the context of marketing a larger brand.

Let's pick a creative brand of footwear, like a Havaianas, a Nike, or a Converse. Cool brands and admittedly, those are always a little easier.

Part 1 - Product Integration

Today direct to garment printing is a generally straightforward affair. This is where a regular person can create artwork, and as an economical one-off job it can be printed professionally onto the fabric of the shoe, or flip flop rubber, or bag or shirt.

So a tool needs to be created for the products in question to allow users to upload art (and possibly even generate art), apply it to a template, and customize any other colors and features.

The company I co-founded created the first working version of Nike ID way back when, and Nike hasn't changed it much since. You still basically just pick colors and monogram words.

But this is the full expression of that original inspiration. This takes it about as far as it can go - short of structural design. And beyond color choices, allows for true creative ownership. And that's important.

This is about personalization, ownership and self expression. Factors that are critical when hoping to inspire engagement and later motivate sharing.

Naturally the user can then purchase their creation.

As I say, this is being done in places. And it's only part of the solution.

Part 2 - Contracting The Consumer

The next part gets interesting, this is where creators of personal designs can choose to put their design into our client's online store for others to browse and buy. All the social factors start to kick in here (such as following, commenting, rating etc). This is the platform on which a user can build an identity that raises his status.

But we go further, we allow the user to set a price, above ours, that his shoe design will cost. Normally we sell the product for $30 say, the user chooses $35. That margin on every sale goes straight back to the user.

Note - we are not paying the user to engage with our brand. What we're doing is being honest and fair about the value that customer is providing our company.

What we've done here is create a platform where consumers are creators of our very products, and even paid employees of our company, albeit working on commission.
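For the arithmetically inclined, the commission idea above can be sketched in a few lines of Python. This is only an illustration, not a spec: the function name and the rule that a creator may not price below the base are my own assumptions, and the $30 and $35 figures are simply the ones from the example.

```python
from decimal import Decimal

# Base price the brand normally charges (the $30 from the example above).
BASE_PRICE = Decimal("30.00")


def creator_commission(list_price: Decimal, units_sold: int,
                       base_price: Decimal = BASE_PRICE) -> Decimal:
    """Total margin paid back to the designer (hypothetical helper).

    Assumption: the creator's price may not fall below the base price,
    so the margin is never negative.
    """
    if list_price < base_price:
        raise ValueError("creator price must not be below the base price")
    # Every dollar above the base price goes straight back to the user.
    return (list_price - base_price) * units_sold


# The creator lists a design at $35.
per_sale = creator_commission(Decimal("35.00"), 1)
hundred_sales = creator_commission(Decimal("35.00"), 100)
```

At those numbers the designer earns $5 on each pair sold, so a design that sells a hundred pairs returns $500 to its creator while the brand still collects its usual $30 on every one.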

Again, all been done, but we are moving away from what has been done under the banner of a big brand, and moving into a business model.

Part 3 - Empowering Our Customer Contractors

Now that our customer has created a great design, and priced it in our store, we need to drop the third leg of the stool - we need to give him tools to further raise his status. To market his designs.

We create a tool that allows the customer to assemble posters, stickers, and movies, ads and spots. How the customer chooses to think of this is his call. But we provide a system that allows him to incorporate his design into artful executions - video of the design being printed on canvas, excellent typography, the ability to upload images and video of his own, access to a huge library of excellent music. In short we develop a small studio in a box. All the tools the customer needs to sell his own product to his own network. We must facilitate that.

Secondarily, like in the App Store, we can offer customers the ability to afford better placement in our storefront. They might even be allowed to trade sales dollars for that placement if they wish.

There are dozens of other ideas that can roll into such a system, but the above illustrates perhaps some basic parts.

I hope you can see that such a thing is a long way from an "ad campaign" even a so-called "native" one. Functioning together all three parts create a functional native ecosystem that centers around our client's business model with a symbiotic business model of its own. A system that will result in consumers meaningfully expressing themselves and investing in the brand, buying the products, and evangelizing on our client's behalf, by definition. Word will spread without a media buy because the system quite literally incentivises socializing, distribution of the message, and sales.

Going Native

This is just a starting point. And building in a payment scheme is not a defining feature of Native Marketing in my opinion. Rather, there is a wide world of opportunity for smarter people than me, if only agencies can wake up real fast to the true nature of the medium. They will eventually be forced away from accepting the advertising paradigm at face value, and from the practice of interrupting consumers with creative yakking about the value of brands.

They must rebuild their position on the solid principles of interactive media - even though that means a significant shift in the skillsets required.

The promise of the medium is that anyone can become big, anyone can be in business, make money, solve problems, achieve fame, express themselves, become better, smarter and happier, and it is your job as a Native Marketer to facilitate all of that for users on behalf of your clients and their business models.

In the Grand Interactive Order you are lowly servants of our King, the User. You must provide him with value. Or you will be cast out.

That's as "native" as it gets.

And that, Mad Man, is the new deep end.

Messages From the Future: The Fate of Google Glass

Man, time travel sucks. I mean think about it, you know all this stuff - and I mean you really know this stuff, but of course you can't say, "You're wrong, and I know, because I’m from the future."

So you pretend like it's just your opinion and then sit there grinding your teeth while everyone else bloviates their opinions without actually knowing anything. Of course my old friends hate me. I mean I was always a know-it-all, but I really do know it all this time, which must make me seem even worse.

Anyway I was catching up on current events and was surprised to realize that I had arrived here smack dab before Google started selling Glass.

Truth is, I'd actually forgotten about Google Glass until I read that they are about to launch it again. Which itself should tell you something about its impact on the future.

So here's the deal on Google Glass. At least as far as I know - what with my being from the future and all.

It flopped.

Nobody bought it.

Oh sure, they sold SOME. Ultimately Google Glass got used mostly by very specialized workers who typically operated in solitude and didn't have to interact with other humans. Of the general public, there were a few geeks, opportunistic future-seekers and Silicon Valley wannabes who bought them to keep up with developments or hoping to look as "cool" as Sergey did when he was famously photographed sitting on the subway (some PR guy later admitted that the whole "I'm just a normal guy slumming on the subway looking like some hipster cyborg" thing was just an orchestrated Glass marketing ploy arranged by Google's PR firm), but they didn't. That's because none of those geeks were young, mincingly-manicured-to-appear-casually-hip billionaires. No. They just looked overtly dorky and, as I recall, slightly desperate for the smug rub-off that comes with publicly flashing a "cool" new product. But that didn't happen for them. Quite the opposite.

Glass just smacked of the old I'm-an-important-technical-guy-armor syndrome. The 90's cellphone belt holster. The 00's blinky blue Bluetooth headset that guys left in their ears blinking away even while not in use. And then Google Glass.

You know, sometimes you see a new innovation and it so upsets the world’s expectations, it's such a brilliant non sequitur, that you can't imagine the events that must have led to such an invention. You wonder what the story was. The iPhone was one of those.

But Google Glass was so mis-timed and straightforward - the exact conversations that led to it seemed transparent. In hindsight, they were just trying too hard, too early, to force something that they hoped would be a big idea - and eventually would be, if only a little over a decade later, by someone else.

Here's the scene:

Sergey and his hand-picked team sit in a super secret, man cave romper room on the Googleplex campus. Then Sergey, doing his best to pick up the magician's torch as an imagined version of Steve Jobs, says:

"As we have long discussed, the day will come when no one will hold a device in their hand. The whole handheld paradigm will seem old and archaic. And I want Google to be the company that makes it happen - now. We need to change everything. I want to blow past every consumer device out there with the first persistent augmented reality solution. The iPhone will be a distant memory. Money is no object, how do we do it?"

And then within 10 minutes of brainstorming (if even), of which 8 mostly involved a geek-speak top-lining of the impracticality of implants, bioware and direct neural interfaces, someone on the team stands with a self-satisfied twinkle of entitlement in his eye stemming from his too good to be true ticket to Google’s billion-dollar playground wonder-world which he secretly fears is little more than the result of his having been in the right place at the right time and might rather be more imaginatively wielded by half a dozen brilliant teenagers scattered throughout that very neighborhood, let alone the globe, says:

"We can do this, think about it. We need to give the user access to visual content, right? And audio. And our solution must receive voice commands. So the platform that would carry all that must naturally exist close to each of the relevant senses - somewhere on the head. And that platform - already exists. (murmurs around the room) Ready? Wait for it... a HAT!"

A sniff is heard. A guy wearing a t-shirt with numbers on it says: "...Augmented Reality ...Hat?"

And then someone else, who is slightly closer to being worthy of his access to the Google moneybags-action playset, says, "No, not a hat… Glasses! Think about it - glasses have been in the public consciousness forever as a device for seeing clearly, right? Well, enter Google, with glasses... that let you see everything clearly, more... clearly."

Everyone in the room nods and smiles. Even obvious ideas can carry a certain excitement when you happen to experience their moment of ideation. This effect of course must be especially pronounced when you've passed through a recruitment process that inordinately reveres academic measures of intelligence.

Either that, or it was just Sergey’s idea from the shower that morning.

In any event, the iPhone was such a truly disruptive idea that one cannot as easily pick apart the thought process that led to it. Too many moving parts. Too much was innovative.

But Glass was a simple idea. Not simple in a good way, like it solved a problem in a zen, effortless way. No, simple like the initial idea was not much of a leap and yet they still didn't consider everything they needed to.

What didn't they consider?

Well having seen it all play out, I'd say: Real people - real life. I think what Google completely missed, developing Glass in their private, billion dollar bouncy-house laboratory, were some basic realities that would ultimately limit adoption of Glass’ persistent access to technology: factors related to humanity and culture, real-world relationships, social settings and pressures, and unspoken etiquette.

Oh and one other bit of obviousness. Sex. And I mean the real kind, with another person’s actual living body - two real people who spend a lot of money to look good.

But I guess I get why these über geeks, of all people, missed that.

While admittedly sunglasses have found a long-time, hard-earned place in the world of fashion as a "cool" accessory when well appointed and on trend, in hindsight, Google Glass should not have expected to leap across the fashion chasm so easily. There are good reasons people spend umpteen fortunes on contact lenses and corrective eye surgeries. Corrective glasses, while being a practical pain in the ass, also effectively serve to make the largest swath of the population less attractive.

Throughout history, glasses have been employed predominantly as the de facto symbol of unattractiveness, of loserdom. They are the iconic tipping point between cool and uncool. The thin line separating the Clark Kents from the Supermen. Countless young ugly ducklings of cinema needed only remove that awkward face gear to become the stunning beauty, the glassless romantic lead. How many make-over shows ADD a pair of glasses?

Sure, there are a few fetishists out there, but for every lover of glasses-wearing geekery, there are a thousand more who prefer their prospective mates unadorned.

Leave it to a bunch of Halo-playing, Dorito-eating engineers to voluntarily ignore that basic cultural bias. And worse, to maybe think all they had to do was wear them themselves to make them cool somehow.

"But didn't you SEE Sergey on the subway?" you ask. "He looked cool."

Well, Sergey had indeed been styled by someone with taste and has been valiantly strutting his little heart out on the PR runway in an obviously desperate effort to infuse some residual "billionaires wear them" fashion credibility into his face contraption.

But look at that picture again, he also looked alone, and sad.

And to think Google Glass was a really good idea, you sort of had to be a loner. A slightly sad, insecure misfit. Typically riding the train with no one to talk to. Incidentally, later, before Facebook died, Facebook Graph showed that Glass wearers didn't have many friends. Not the kind they could hug or have a beer or shop with.

Wearing Google Glass made users feel like they didn't have to connect with the actual humans around them. "I'm elsewhere - even though I appear to be staring right at you." Frankly the people who wore Google Glass were afraid of the people around them. And Glass gave them a strange transparent hiding place. A self-centered context for suffering through normal moments of uncomfortable close proximity. Does it matter that everyone around you is more uncomfortable for it?

At least with a hand-held phone there was no charade. The very presence of the device in hand, head down, was a clear flag alerting bystanders to the momentary disconnect. "At the moment, I'm not paying attention to you."

But in its utterly elitist privacy, Google Glass offered none of that body language. Which revealed other problems.

In the same way that the introduction of cellphone headsets made a previous generation of users on the street sound like that crazy guy who pees on himself as he rants to no one, Google Glass pushed its users past that, occupying all their attention, their body in space be damned - mentally disconnecting them from their physical reality. With Glass, not even their eyes were trustworthy.

Actually, it was commonly joked that Glass users often appeared downright "mentally challenged" as they stared through you trying to work out some glitch that no one else in the world could see. They'd stutter commands and tap their heads and blink and look around lost and confused.

Suddenly we all realized what poor multi-taskers these people really were.

Any wearer who actually wanted to interact with the real world quickly found they had to keep taking off their Google Glasses and stowing them, or else everyone got mad.

It was simply deemed unacceptable to wear them persistently. And in fact users reported having been socially pressured to use them much as they had previously used their phones. Pulling them out as needed. Which utterly defeated the purpose. On some level - that's what broke Google Glass. It wasn't what it was supposed to be. It wasn't persistent. It was more cumbersome and socially uncomfortable than the previous paradigm.

People who left them on in social situations were openly called "glassholes".

They were smirked at, and laughed at walking down the street. I know because I did it too.

There were lots of news reports about people who got punched for wearing them in public. In fact, anecdotally, there were more news reports about people getting beat up for wearing Google Glass in public than I actually saw on the street wearing them. The court of public opinion immediately sided on the position that Google Glass was little more than some random stranger shoving a camera in your face. Other people stopped talking to wearers until they took them off. They didn't even want it on top of their heads.

In hindsight it was pretty quickly clear Google Glass wasn't going to be a revolution.

I read an interview somewhere (years from now) that someone on the Google team had admitted that they more than once asked themselves if they were on the right track - but that the sentiment on the team was that they were doing something new. Like Steve Jobs would have done. Steve Jobs couldn't have known he was on the right track any more than they did - so they pushed forward.

Except that I think Steve Jobs sort of did know better. Or rather, he was better connected to the real world than the boys at Google’s Richie Rich Malibu Dream Labs were. Less dorky and introverted, basically.

The problem with innovation is that all the pieces need to be in place. Good ideas and good motivation can be mistimed. Usually are. That's all Google Glass was. Like so many reasonable intentions it was just too early. Selling digital music didn't work until everything was in place - iPods and iTunes were readily available and insanely easy to sync. HDTV didn't hit until content and economics permitted. And the world didn't want persistent augmented reality when Google created Glass.

All the above disclosed, Augmented Reality is still indeed your future. It's just that when it finally comes, well, when it happened, it didn't look like Google Glass.

Like, at all.

And I know, because I'm from the future.

My First Message From the Future: How Facebook Died

It was a hot, sunny Boston morning in July, 2033 - and suddenly - it was a freezing London evening in Feb 2013, and I had an excruciating headache.

I have no clue what happened. No flash, no tunnel, no lights. It's like the last 20 years of my life just never happened. Except that I remember them.

Not knowing what else to do I went to the house I used to live in then. I was surprised that my family was there, and everyone was young again. I seemed to be the only one who remembers anything. At some point I dropped the subject because my wife thought I'd gone crazy. And it was easier to let her think I was joking.

It's hard to keep all this to myself though, so, maybe as therapy, I've decided to write it here. Hardly anyone reads this so I guess I can't do too much damage. I didn't write this stuff the first time around, and I'm a little worried that the things I share might change events to the point that I no longer recognize them, so forgive me if I keep some aspects to myself.

As it is I already screwed things up by promptly forgetting my wife's birthday. Jesus Christ, I was slightly preoccupied, I mean, I'm sorry, ok? I traveled in time and forgot to pick up the ring that I ordered 20 years ago… and picked up once already. All sorts of stuff changed after that for a while. But then somehow it all started falling back into place.

Anyway - that's why I'm not telling you everything. Just enough to save the few of you who read this some pain.

Today I'll talk about Facebook.

Ok, in the future Facebook, the social network, dies. Well, ok, not "dies" exactly, but "shrivels into irrelevance", which was maybe just as bad.

Bets are off for Facebook the company. I wasn't there long enough to find out - it might survive, or it might not, depends on how good they were… sorry, are at diversifying.

At this point perhaps I should apologize for my occasional shifting tenses. I'm finding that time travel makes it all pretty fuzzy. But I'll do my best to explain what happened... Happens. Will happen.

Anyway, seeing Facebook back here again in full form, I marvel at the company's ability to disguise the obviousness of the pending events in the face of analysts, and corporate scrutiny, with so many invested and so much to lose.

But hindsight being 20/20, they should have seen - should see - that the Facebook social network is destined to become little more than a stale resting place for senior citizens, high-school reunions and, well, people whose eyes don't point in the same direction (it's true, Facebook Graph showed that one, it was a joke for a while - people made memes - you can imagine). Grandmothers connecting with glee clubs and other generally trivial activities - the masses and money gone.

There were two primary reasons this happened:

First - Mobile (and other changing tech - including gaming, iTV and VR). I know, I know I'm not the first, or 10,000th guy to say "Mobile" will contribute to Facebook's downfall. But there is a clue that you can see today that people aren't pointing out. While others look at Facebook with confidence, or at least hope, that Facebook has enough money and resources to "figure mobile out", they don't do it. In fact there is a dark secret haunting the halls of the Facebook campus. It's a dawning realization that the executive team is grappling with and isn't open about - a truth that the E-suite is terrified to admit. I wonder if some of them are even willing to admit it to themselves yet.

Here is the relevant clue - the idea that would have saved Facebook's social network, that would make it relevant through mobile and platform fragmentation - that idea - will only cost its creators about $100K. That's how much most of these ideas cost to initiate - it rarely takes more. Give or take $50k.

That's all the idea will cost to build and roll out enough to prove. 3-6 months of dev work. Yeah it would have cost more to extend it across Facebook's network. But that would have been easy for them. So, Facebook has gobs of $100Ks - why hasn't it been built yet?

The dark secret that has Facebook praying the world doesn't change too fast too soon (spoiler alert, it does), is that - they don't have the idea. They don't know what to build.

Let me repeat that, Facebook, the company, doesn't have the one idea that keeps their social network relevant into mobile and platform fragmentation. Because if they actually did… it's so cheap and easy to build, you would already see it. Surely you get that, right? Even today?

Perhaps you take issue with the claim that only "one idea" is needed. Or perhaps you think they do have the vision and it's just not so easy; it requires all those resources, big, complex development. And that today it's being implemented by so many engineers, in so many ways across Facebook with every update. Perhaps you will say that continually sculpting Facebook, adding features, making apps, creating tools for marketers, and add-ons, will collectively add up to that idea. This is what Facebook would prefer you believe. And it's what people hope I guess.

Well, that's not how it works. Since the days Facebook was founded, you have seen a paradigm shift in the way you interact with technology. And that keeps changing. I can report that the idea that will dominate within this new paradigm, will not merely be a collection of incremental adjustments from the previous state.

Hell, Facebook was one simple idea once. One vision. It didn't exist, and then it did (and it didn't even cost $100K). It answered a specific need. And so too will this new idea. It won't be a feature. It won't look like Facebook. It will be a new idea.

I know, I've heard it, "Facebook can just buy their way into Mobile". You've seen that desperation already in the Instagram land grab. It's as if Mark said "…oh… maybe that's it..?? …or part of it … Maybe…?"

Cha-ching.

The price was comically huge. Trust me, in the future a billion dollars for Instagram looks even dopier. How much do you think Instagram spent building the initial working version of Instagram? Well, I didn't work on it, but like most projects of their ilk I am willing to bet it was near my magic number: $100K. I read somewhere that Instagram received $250K in funding early on and I seriously doubt they had to blow through more than half that on the initial build.

And Facebook's desperate, bloated buy of Instagram is virtual confirmation of the point. See, you don't buy that, and pay that much, if you have your own vision. If you have the idea.

Unfortunately, Facebook will eventually realize that Instagram wasn't "it" either. No, the idea that will carry social networking into your next decade of platform fragmentation and mobility isn't formally happening yet. Rather the idea that will make social connections work on increasingly diverse platforms will come about organically. Catching all the established players mostly by surprise. It will be an obvious model that few are thinking about yet.

And that leads us to the second, and most potent, reason Facebook withers - Age.

Facebook found its original user-ship in the mid '00s. It started with college-age users and quickly attracted the surrounding, decidedly youthful, psychographics. This founding population was united by a common life-phase; young enough to be rebelling and searching for a place in the world they can call their own, and just barely old enough to have an impact on developing popular trends.

Well, it's been almost a decade for you now. Time flies. Those spunky, little 20+ year-old Facebook founders are now 30+ year-olds and Facebook is still their domain. They made it so. And they still live their lives that way. With Facebook at its center.

But now at 30 things have started to change - now they have kids. Their kids are 6-12 years-old and were naturally spoon-fed Facebook. That's just the nature of life as a child living under Mom and Dad. You do what they do. You use what they use. You go where they go. Trips to the mall with Mom to buy school clothes. Dad chaperoning sleep-overs. Messages to Grandma on Facebook. It's a lifestyle that all children eventually rebel against as they aggressively fight to carve out their own world.

So give these kids another 6 years, the same rules will apply then. They'll be full-blown teenagers. They started entering college. They wanted their own place. And importantly, they inherited your throne of influence for future socializing trends. Yup, the generation of Mark Zuckerbergs graduated to become the soft, doughy, conventionally uncool generation they are... or rather, were, in the future.

So project ahead with me to that future state, do you really think Facebook is going to look to these kids like the place to hang out?? Really? With Mom and Dad "liking" shit? With advertisers searching their personal timelines?

No - way.

Don't even hope for that. See, the mistake a lot of you are making is that Facebook was never a technology - for the users, Facebook has always been a place. And 6-7 years from now these kids will have long-since found their own, cooler, more relevant place - where Mom and Dad (and grandma, and her church, and a gazillion advertisers) aren't. And it won't be "Social Network Name #7", powered by Facebook (but Facebook tries that - so I bought their URL yesterday - I recall they paid a lot for it). You will find it to be a confoundedly elusive place. It will be their own grass-roots network - a distributed system that exists as a rationally pure mobile, platform-agnostic, solution. A technically slippery, bit-torrent of social interaction. A decisive, cynical response to the Facebook establishment, devoid of everything Facebook stood for. At first it will completely defy and perplex the status quo. That diffused, no-there-there status makes advertisers crazy trying to break in to gain any cred in that world. But they don't get traction. The system, by design, prohibits that. At least for a year or two. Not surprisingly some advertisers try to pretend they are groups of "kids" to weasel in, and it totally blows up in their faces. Duh. It will be a good ol' wild west moment. As these things go. And they always do go. You've seen it before. And the kids win this time too.

Then a smart, 20-year-old kid figures out how to harness the diffusion in a productized solution. Simply, brilliantly, unfettered by the establishment.

And at this point, you might say - "… well… Facebook can buy that!"

Sorry, doesn't happen. I mean, maybe it could have, but it doesn't. Don't forget, Yahoo tried to buy Facebook for a Billion Dollars too.

For a kid, the developer of this new solution is shrewd, and decides that selling out to Facebook would weaken what he and his buddies built - rendering it immediately inauthentic.

Seeing the power of what he holds, this kid classically disses Mark's desperate offer. It's all very recursive, and everyone wrote about that. My favorite headline was from Forbes: "Zucker-punched". And anyway, Google offers him more (which is not a "buy" for Google - later post).

Look, it doesn't matter, because at that point Facebook is already over because Facebook isn't "where they are" anymore.

Their parents, Facebook's founding user-base, stay with Facebook for a while, and then some - those who still care how their bodies look in clothes (again, Facebook's Graph famously showed this) - will switch over, presumably because they suddenly realized how uncool Facebook had become. Then even more switched because they needed to track their kids and make sure they were not getting caught up in haptic-porn (something I actually rather miss now). And that kicks off the departure domino effect (or "The Great Facebalk", The Verge, 2021 I believe).

Later, Grandma even switches over. But some of her friends are still so old-timey that she'll keep her Facebook account so she can share cat pictures with them. And of course, she won't want to miss the high-school reunions.

So that is Facebook's destiny. And you know, I am from the future. So I know.

Oh one last thing, in Petaluma there's a 14 year-old kid I bumped into the other day - quite intentionally. He's cool. He's hungry. When he turns 20, I plan on investing exactly $100K in some crazy idea he'll have. I have a pretty good feeling about it. I'll let you know how it goes.

Why Apple's Interfaces Will Be Skeuomorphic Forever, And Why Yours Will Be Too

"Skeuomorph..." What?? I have been designing interfaces for 25 years and that word triggers nothing resembling understanding in my mind on its linguistic merit alone. Indeed, like some cosmic self-referential joke the word skeuomorph lacks the linguistic reference points I need to understand it.

So actually yes, it would be really nice if the word ornamentally looked a little more like what it meant, you know?

So Scott Forstall got the boot - and designers the world over are celebrating the likely death of Apple's "skeuomorphic" interface trend. Actually I am quite looking forward to an Ive-centric interface, but not so much because I hate so-called skeuomorphic interfaces, but because Ive is a (the) kick ass designer and I want to see his design sensibility in software. That will be exciting.

And yet, I'm not celebrating the death of skeuomorphic interfaces at Apple because - and I can already hear the panties bunching up - there is no such thing as an off-state of skeuomorphism. That's an irrelevant concept. And even if there was such a thing, the result would be ugly and unusable.

Essentially, every user interface on Earth is ornamentally referencing and representing other unrelated materials, interfaces and elements. The only questions are: what's it representing, and by how much?

For example, there is a very popular trend in interface design - promoted daily by the very designers who lament Apple's so-called "skeuomorphic" leather and stitching - where a very subtle digital noise texture is applied to surfaces of buttons and pages. It's very subtle - but gives the treated objects a tactile quality. Combined with slight gradient shading, often embossed lettering and even the subtlest of drop shadows under a button, the effect is that of something touchable - something dimensional.

Excuse me, how can this not be construed as skeuomorphic?

Is that texture functional - lacking any quality of ornamentation? Is the embossing not an attempt to depict the effect of bumps on real world paper? Are the subtle drop shadows under buttons attempting to communicate something other than the physicality of a real-world object on a surface, interacting with a light source that doesn't actually exist? The most basic use of the light source concept is, by definition skeuomorphic.

Drop shadows, embossing, gradients suggesting dimension, gloss, reflection, texture, the list is endless… and absolutely all of this is merely a degree of skeuomorphism because it's all referencing and ornamentally rendering unrelated objects and effects of the real world.

And you're all doing it.

This whole debate is a question of taste and functional UI effectiveness. It's not the predetermined result of some referential method of design. So when you say you want Apple to stop creating skeuomorphic interfaces - you really don't mean that. What you want is for Apple to stop having bad taste, and you want Apple to make their interfaces communicate more effectively.

The issues you have had with these specific interfaces are that they either communicated things that confused and functionally misled (which is bad UX), or simply felt subjectively unnecessary (bad taste). And these points are not the direct result of skeuomorphism.

"But," you say, "I don't use any of that dimensional silliness. My pages, buttons and links are purely digital - 'flat' and/or inherently connected only to the interactive function, void of anything resembling the real world, and void of ornamentation of any kind. Indeed, my interfaces are completely free of this skeuomorphism."

Bullshit.

I'll skip the part about how you call them pages, buttons and links (cough - conceptually skeuomorphic - cough) and we'll chalk that up to legacy terminology. You didn't choose those terms. Just as you didn't choose to think of the selection tool in Photoshop as a lasso, or the varied brushes, erasers and magnifying glass. That's all legacy - and even though it makes perfect sense to you now - you didn't choose that. Unless you work at Adobe in which case maybe you did and shame on you.

But you're a designer - and your interfaces aren't ornamental - yours are a case of pure UI function. You reference and render nothing from anywhere else except what's right there on that… page… er, screen… no ... matrix of pixels.

For example, perhaps you underline your text links. Surely that's not skeuomorphic, right? That's an invention of the digital age. Pure interface. Well, let's test it: Does the underline lack ornamentation? Is it required to functionally enable the linking? Well, no, you do not have to place an underline on that link to technically enable linking. It will still be clickable without the underline. But the user might not understand that it's clickable without it. So we need to communicate to the user that this is clickable. To do that we need to reference previous, unrelated instances where other designers have faced such a condition. And we find an underline - to indicate interactivity.

"Wait," you say, "the underline indicating linking may be referencing other similar conditions, but it's pure UI, it's simply a best practice. It is not a representation of the real world. It's not metaphorical."

Nyeah actually it is. It just may not be obvious because we are sitting in a particularly abstract stretch of the skeuomorphic spectrum.

Why did an underlined link ever make sense? Who did it first and why? Well although my career spans the entirety of web linking I have no clue who did it on a computer first (anyone know this?). But I do know that the underline has always (or for a very looooong time) - well before computers - been used to emphasize a section of text. And the first guys who ever applied an underline to a string of text as a UI solution for indicating interactivity borrowed that idea directly from real-world texts - to similarly emphasize linked text - to indicate that it's different. And that came from the real world. We just all agree that it works and we no longer challenge its meaning.

Face it, you have never designed an effective interface in your whole life that was not skeuomorphic to some degree. All interfaces are skeuomorphic, because all interfaces are representational of something other than the pixels they are made of.

Look I know what the arguments are going to be - people are going to fault my position on this subject of currency and how referencing other digital interface conventions "doesn't count" - that it has to be the useless ornamental reproduction of some physical real-world object. But you are wrong. Skeuomorphism is a big, fat gradient that runs all the way from "absolute reproduction of the real world" to "absolute, un-relatable abstraction".

And the only point on that spectrum truly void of skeuomorphism is the absolute, distant latter: pure abstraction. Just as zero is the only number without content. And you know what that looks like? It's what the computer sees when there's no code. No user interface. That is arguably a true lack of skeuomorphism. Or rather, as close as we can get. Because even the underlying code wasn't born in the digital age, it's all an extension of pre-existing language and math.

Look at it this way - an iPad is a piece of glass. You are touching a piece of glass. So as a designer you need a form of visual metaphor to take the first step in allowing this object to become something other than a piece of glass. To make it functional. And that alone is a step on the skeuomorphic spectrum.

Sure you can reduce the silliness and obviousness of your skeuomorphism (good taste), and you can try to use really current, useful reference points (good UI), but you cannot design without referencing and rendering aspects of unrelated interfaces - physical or digital. And that fact sits squarely on the slippery slope of skeuomorphism.

I read a blogger who tried to argue that metaphoric and skeuomorphic are significantly different concepts. I think he felt the need to try this out because he thought about the topic just enough to realize the slippery slope he was on. But it ultimately made no sense to me. I think a lot of people want a pat term to explain away bad taste and ineffective UI resulting from a family of specific executions, but I don't think they have thought about it enough yet. Skeuomorphic is metaphoric.

OK so let's say all this is true. I know you want to argue, but come with me.

In the old days - meaning 1993-ish - There was something much worse than your so-called skeuomorphic interface. There were interfaces that denied the very concept of interface - and looked completely like the real world. I mean like all the way. A bank lobby for example. So you'd pop in your floppy disc or CD-Rom and boom - you'd be looking at a really bad 3D rendering of an actual bank teller window. The idea was awful even then. "Click the teller to ask a question" or "Click the stapler to connect your accounts".

And that was a type of "skeuomorphism" that went pretty far up the spectrum.

Back then my team and I were developing interfaces where there were indeed, buttons and scroll bars and links but they were treated with suggestive textures and forms which really did help a generation of complete newbie computer users orient themselves to our subject and the clicking, navigating and dragging. You would now call what we'd done skeuomorphism.

My team and I used to call these interfaces that used textures and forms, ornamentally suggestive of some relevant or real-world concept "soft metaphor interfaces". Where the more literal representations (the bank lobby) were generally called "hard metaphor interfaces".

These terms allowed for acknowledgment of variability, of volume. The more representative, the "harder" the metaphoric approach was. The more abstract, the "softer" it could be said to be.

To this day I prefer these qualifiers of metaphor to the term "skeuomorphic". In part because "skeuomorphic" is used in a binary sense which implies that it can be turned off. But the variability suggested by the softness of metaphor is more articulate and useful when actually designing and discussing design. Like lighter and darker, this is a designer's language.

I hope after reading this you don't walk away thinking I believe the leather and stitching and torn paper on the calendar app was rightly implemented. It wasn't - and others have done a solid job explaining how it breaks the very UX intent of that app.

But the truth is - there are times when some amount of metaphor, of obvious skeuomorphism in interface design makes tons of sense. Take the early internet. Back then most people were still relatively new to PCs. Ideas we take for granted today - like buttons, hover states, links, dragging and dropping, etc. - were completely new to massive swaths of the population. Computers scared people. Metaphorical interfaces reduced fear of the technology - encouraged interaction.

And I think, as Apple first popularized multi-touch - an interface method that was entirely new - it made all the sense in the world to embrace so-called skeuomorphism as they did. I don't begrudge them that at all. Sure - there are lots of us that simply didn't need the crutch. We either grew up with these tools and/or create them, and feel a bit like it talks down to us. But Apple's overt skeuomorphic interfaces weren't really aimed at us.

Remember the launch of the iPad, where Steve Jobs announced that this was the "post PC era"? Apple didn't win the PC war - and instead deftly changed the game. "Oh, are you still using a PC? Ah, I see, well that's over. Welcome to the future." Brilliant!

But the population WAS still using a PC. And Apple, with its overt skeuomorphic interfaces, was designing for them. Users who were figuratively still using IE6. Who were afraid of clicking things lest they break something.

These users needed to see this new device - this new interface method - looking friendly. It needed to look easy and fun. And at a glance, hate it though you may, well-designed metaphorical interfaces do a good job of that. They look fun and easy.

Communicating with your users is your job. And to do that you must continue to devise smart UI conventions and employ good taste - and that means choosing carefully where on the skeuomorphic spectrum you wish to design. Skeuomorphic is not a bad word. It's what you do.

Advertisers Whine: "Do Not Track" Makes Our Job Really Super Hard

So the Association of National Advertisers got its panties all twisted in a knot because Microsoft was planning to build a "Do Not Track" feature into the next version of Internet Explorer - as a default setting. Theoretically this should allow users of Explorer 10 to instruct marketers not to track the sites they visit, the things they search for, and the links they click.

A letter was written to Steve Ballmer and other senior executives at Microsoft demanding that the feature be cut because, and get this, it: "will undercut the effectiveness of our members' advertising and, as a result, drastically damage the online experience by reducing the Internet content and offerings that such advertising supports. This result will harm consumers, hurt competition, and undermine American innovation and leadership in the Internet economy."

This is about a feature which allows you to choose not to have your internet behavior tracked by marketers. I'll wait till you're done laughing. Oh God my cheeks are sore.

And if the story ended here, I'd just gleefully use Explorer 10 and tell all the sputtering, stammering marketers who would dumbly fire advertisements for socks at me since I indeed bought some socks over 2 months ago indicating that I must be a "sock-buyer", to suck it up.

But the story does not end there.

The problem is that "Do Not Track" is voluntary. Advertisers are technically able to ignore the setting and do everything you think you are disallowing. The industry has only agreed to adhere to the Do Not Track setting if it is not on by default - only if it has been explicitly turned on by a human being which would indicate that this person really truly does not want to be tracked. A default setting does not "prove" this intention.

So when word of Microsoft's plans got out, Roy Fielding, an author of "Do Not Track", wrote a patch allowing Apache servers to completely ignore Microsoft's setting by default. In support of this Fielding states:

"The decision to set DNT by default in IE10 has nothing to do with the user's privacy. Microsoft knows full well that the false signal will be ignored, and thus prevent their own users from having an effective option for DNT even if their users want one."

So Microsoft - who may very well have been grandstanding with its default DNT to earn points with consumers - backed down and set it to off, by default. Now if you turn it on - theoretically it should work for you. But of course now the same stale rule applies, only in reverse: the DNT setting will be "off" for most users - not because the user chose that setting, but because the user likely didn't know any better - and presto - sock ads.
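If you want to see just how flimsy this arrangement is, here is a rough sketch of the mechanics in Python. The only real-world details in it are that the browser's signal is an HTTP request header, `DNT: 1`, and that IE10 identifies itself with "MSIE 10.0" in its User-Agent string. Everything else (the function names, the `honor_dnt` and `distrust_ie10` switches) is made up for illustration, and this is not Fielding's actual patch or any ad server's real code. It just shows that respecting the header is a choice the server makes, and that throwing the header away for one browser takes a few lines.

```python
from typing import Dict, Mapping


def normalize(headers: Mapping[str, str]) -> Dict[str, str]:
    """Lower-case header names, since HTTP header names are case-insensitive."""
    return {name.lower(): value.strip() for name, value in headers.items()}


def dnt_requested(headers: Mapping[str, str]) -> bool:
    """True when the request carries the opt-out signal `DNT: 1`."""
    return normalize(headers).get("dnt") == "1"


def drop_default_dnt(headers: Mapping[str, str]) -> Dict[str, str]:
    """Hypothetical filter in the spirit of the patch described above:
    discard the DNT header when the User-Agent looks like IE10."""
    cleaned = normalize(headers)
    if "MSIE 10.0" in cleaned.get("user-agent", ""):
        cleaned.pop("dnt", None)
    return cleaned


def should_track(
    headers: Mapping[str, str],
    honor_dnt: bool = True,       # assumption: the server chooses to comply
    distrust_ie10: bool = False,  # assumption: the server treats IE10's DNT as noise
) -> bool:
    """Decide whether to track this visitor. Nothing forces either setting."""
    if distrust_ie10:
        headers = drop_default_dnt(headers)
    if honor_dnt and dnt_requested(headers):
        return False
    return True
```

Flip `honor_dnt` to `False` and every visitor is tracked no matter what their browser says. Flip `distrust_ie10` to `True` and an IE10 user's request is treated exactly as if the header had never been sent.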

So the marketers breathe a sigh of relief. Crisis averted. Advertising's parasitic, interruptive, low-bar-creative business model can prevail.

At least it will work until the day comes that users all start using DNT. At which point we'll be right back here again with advertisers screeching that the whole thing is broken because it threatens the American way.

And if you've read any other posts on this blog you know I believe an oppressive threat to the advertising business model is exactly what needs to happen.

At the end of the day - advertisers need to stop interrupting your attention and vying for surreptitious control over your privacy and your life.

The ad industry instead needs to learn how to create messages consumers actually want. Desirable, welcome things that don't naturally result in the vast majority of the population idly wishing there was a button to disallow it, as is the case today.

If you are an advertiser you probably read this and have no idea what such a thing might be.

And that's the problem with your world view.

The Crowd Sourced Self

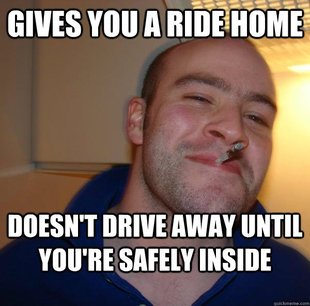

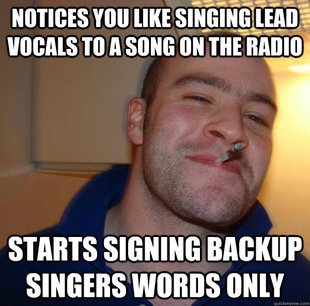

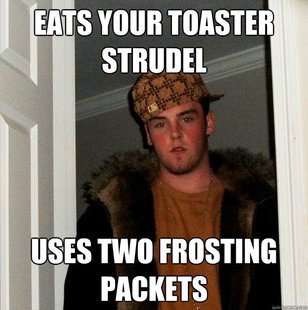

There is a guide available for anyone who wishes to learn how to be a better person. One that explains, in detail, what the greater population thinks is noble, strong, and good. It also clearly illustrates the behaviors and traits that our society looks down on as weak and evil. If one were to follow the examples in this guide, one would make more friends, be more loved and trusted, and have more opportunity in life. It also shows why some people, perhaps unaware of this guide, are destined to be considered pariahs of society, doomed to a life of broken relationships, challenges and limits. I'm not talking about the Bible. I, of course, am talking about the dueling memes, Good Guy Greg and Scumbag Steve.

Created primarily as humorous, even juvenile, expressions of modern character archetypes, together these memes are actually far more than that.

Together, these two memes illustrate the way to be. A surprisingly true, topical, crowd sourced opinion on what makes someone the best possible modern person and what makes someone a scumbag.

Good Guy Greg is a kind of ideal. The slacker Jesus of our disaffected generation. Good Guy Greg is the friend everyone wishes they had. A kind of righteous, loyal, non-judgmental, buddy superhero who always, without question, under any circumstances, can be counted on to do exactly the right thing by his friends. A selfless action hero of modern morality. The spirit of Good Guy Greg is your "do" list.

Scumbag Steve is Greg's opposite. In case a guide for what the modern internet generation wants in a friend is not enough, Scumbag Steve illustrates how not to be. His archetype is so painfully recognizable - his behavior, normally hidden from scrutiny by the confounding impermanence of real-time interaction and his subsequent denial, is exposed here, red-handed, for reflection and analysis. We now recognize you Steve, and no one likes you.

Good Guy Greg and Scumbag Steve are seething with relevant, contemporary trivialities which make their lessons completely identifiable and practical.

In a way, through the humor, the world has created these memes for exactly this reason - to show you the way to be.

The Secret to Mastering Social Marketing

Social Marketing is huge. It's everywhere. If you work in advertising today, you're going to be asked how your clients can take advantage of it, how they can manage and control it. There are now books, sites, departments, conferences, even companies devoted to Social Marketing.

Through these venues you'll encounter a billion strategies and tactics for taking control of the Social Marketing maelstrom. Some simple - some stupidly convoluted.

And yet through all of that there is really only one idea that you need to embrace. One idea that rises above all the others. One idea that trumps any social marketing tactic anyone has ever thought of ever.

It's like that scene in Raiders of the Lost Ark when Indy is in Cairo meeting with that old dude who is translating the ancient language on the jeweled headpiece that would show exactly where to dig. And suddenly it dawns on them that the bad guys only had partial information.

"They're digging in the wrong place!"

Well if you are focused on social marketing strategies and tactics - you're digging in the wrong place.

You don't control social marketing. You don't manage it. You are the subject of it.

The secret to mastering social marketing is this:

Make the best product, and provide the best customer service.

Do this, and social marketing will happen. Like magic. That's it.

Make the best product, and provide the best customer service.

There is no social marketing strategy that can turn a bad product or service into a good one. No button, no tweet, no viral video campaign, no Facebook like-count, that will produce better social marketing results than simply offering the best product and customer service in your category.

And if this whole outlook deflates the hopes you had when you began reading this, you are probably among those searching for some easy, external way of wielding new tools and associated interactions in order to manipulate potential customers. Of gaming the system. Sorry. You're digging in the wrong place.

Social marketing is just the truth. Or rather it needs to be. And any effort you put into manipulating that truth will undermine your credibility when it's revealed - because it will be. In fact, with rare exception, your mere intervention in the social exchange will be, and should be, regarded with suspicion.

Like when the other guy's lawyer tells you it's a really good deal - just sign here. O..kay...

Take the recent case of Virgin Media. Reported to have some of the worst customer service satisfaction in the industry. Something I can personally attest to.

It took me three months, eight take-the-entire-day-off-work-and-wait-around-for-them-to-show-up-at-an-undisclosed-time appointments (three of which were no-shows) and countless interminable phone calls to their based-on-current-call-volume-it-could-take-over-an-hour-for-an-operator automated answering system, to install one internet connection. It then took an additional seven months (not exaggerating) to activate cable TV in my home (all the while paying for it monthly no less). But what makes this relevant was that after all the scheduling, rescheduling, no-shows, begging, re-rescheduling, being insulted, ignored and generally treated like a complete waste of the company's effort, the day I Tweeted that "Virgin Media Sucks!", I got an immediate response - in that public forum, not privately - feigning sincere interest in helping me.