What If A.I. Doesn’t Get Much Better Than This? (Rolls Eyes)

A number of friends sent me this article today:

What If A.I. Doesn’t Get Much Better Than This?

Written by Cal Newport for The New Yorker, the article argues that A.I. may be quite overhyped, and it points to the underwhelming release of GPT-5 as evidence, a release which, the story claims, reveals limitations in language models. Indeed, the article spends its length convincing readers that A.I. just isn't all that it's cracked up to be: the technology weaker, the market smaller, the disruption less, your jobs more secure than the hypists have led you to believe.

A number of people have made similarly dismissive arguments over the last couple of years, citing various research, well before the release of GPT-5. And if you buy into the cold-water argument today, you would be forgiven for feeling relieved that maybe the world is not running as far out of control as you feared.

But there is a problem with the argument and its accompanying sense of calm reassurance, and I'm honestly surprised so many are taking it at face value.

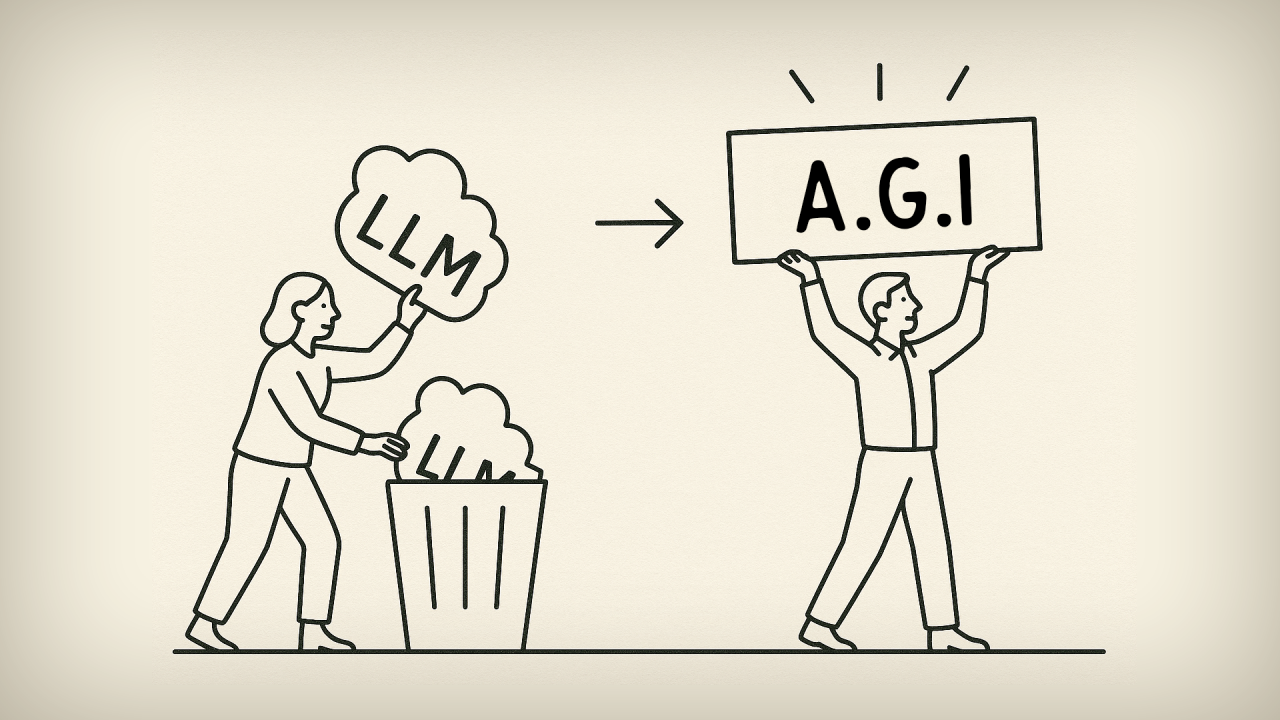

Throughout the article, including in the title, the author uses "A.I." and various forms of "language model" interchangeably. Indeed, the entire article purports to dispel the hype around "A.I." because language models have some hard limitations. Newport's not alone; many people use these terms interchangeably today. Needless to say, these are not equivalent concepts. While it may be true that all neural language models are A.I., it's not necessarily true that all A.I.s are going to be neural language models. In defense, one might point out that, today anyway, our only functioning A.I.s are language models, so what's the big deal? But there is a difference, and a very relevant one. It is not a point that Newport, or those I've debated, sufficiently clarifies, because the answer essentially just reopens the topic of inevitability around exponential progress in A.I., and reasserts the very feelings of hype and optimism (or intuitive fear and dread, depending on who you are) the argument was attempting to dismiss.

During the wide adoption of "personal computers" and the Internet in the mid 1990s, there was a similarly breathless frenzy in media coverage of progress in technology and tech companies that, like today, seemed to take on a life of its own. Moore's Law was constantly in the news then as the primary driver of speculation about advancement. True to the prediction, chips were dutifully doubling their power every 18-24 months or so. But no sooner had the public wrapped its collective head around the idea than naysaying claims that the end of Moore's Law was in sight began to be published. 2012 was one year in particular I recall being cited as the inevitable end of advancement in silicon power. And there were many others. But one by one, the feared "end of silicon" deadlines passed. We may yet see an end to our advancement in silicon computing, but it's looking more and more like what we will really do is switch to a new, even more powerful domain: quantum. Such a thing would indeed mark the end of silicon advancement, but not a stagnation of computing at large. Quite the opposite.

The point here is that silicon is no more "computing" than language models are "A.I.".

What must be acknowledged is that every advance in our technology makes humanity better and faster at taking the next step. Quantum computing would never be possible without silicon first. And every advance in silicon has made the next step more likely. So whatever methods humanity eventually derives that bring AGI and ASI, language models will have played a key role in exponentially speeding our progress to that state.

"Exponential" progress toward AGI does not simply survive or die with a single technology or method. The exponential impact of progress is the result of the collective of human technologies overlapping symbiotically and unexpectedly, distributed across greater and greater numbers of us as innovators.

So before you put your uncertainties and fears in the closet because language models "have a hard limitation", before you lower your guard to the disruption AI will inevitably bring to our jobs, lives and society at large, recognize that language models may merely be one ingredient in an exponentially speeding recipe that is still very much in progress right in front of you.

I don't know how else to say this: A.I. is not stopping. That subconscious clack clack clack keeping you awake at night is still the sound of the A.I. rollercoaster climbing up the hill.

In short: language model limitations or not, nothing has changed.

History has not much remembered those who've dismissively recited the list of limitations in technology's way. Words like "never", "impossible" and "can't" don't age well when technical progress is the subject and time is involved.

One way or another we tend to blow right through those limitations with unpredictable new ideas from unexpected places.

With language models, humanity is more able than ever to put arguments and articles like this one into the bin, at an exponential rate.

So while I appreciate the effort (thanks for trying), I, for one, am back to being terrified.