Our Destination Precedes Us

A Simpleton's Reflection on Being Human in the Digital Age

I was on a walk over the weedy, wild-flowery hills overlooking the lake near my home in Switzerland. I think it’s maybe the most beautiful place to walk in the world. For some reason today I was thinking about how much time I, and all of us really, spend on digital platforms.

Perhaps specifically because I was walking, smelling fresh air, feeling aware of my physicality such that the contradiction of digital experiences was laid bare, seemingly out of nowhere a string of words formed before me:

I do not have legs so that I might sit.

The disarming profundity of that sentence caused me to catch my breath. I felt a lump in my throat and stopped walking. Not because I chose to but because a rapid string of subsequent thoughts cascaded quickly behind those words, making good on the instant recognition of some intuitive truth.

I’m not very sophisticated. I don’t read enough to know who may have written life’s universal observations before my pea brain happens by them. Only that I know someone before me, countless probably, surely had such thoughts and wrote more eloquently about them than I ever could.

And yet I was immediately haunted by my version of the moment.

Disoriented, I looked at my hands and had an out-of-body experience.

Hands are so odd. Aren’t they? Look at them. Branching into fingers. Connected to arms. And they to torsos. So peculiar and specific.

What strange things we are. Humans. Cleaved in places, elongated in others.

In that moment I was briefly removed from the culture and concerns of people, looking abstractly at my physical form. Like when you repeat a word so many times it loses meaning and becomes unrecognizable, but here it was the opposite. The meaning, or at least a new, resonant meaning suddenly bloomed into focus, whereas all the minutes, hours, days and years before it, all occupied by concerns of careers and politics and brands and oddly arbitrary preferences and goals, all suddenly seemed to exist in a cloud of unimportant abstract nonsense.

Fine, maybe I was having a 40-year-delayed acid flashback.

Possible.

Either way, through it I saw, or recognized again, an elusive truth that I must have contemplated before, but probably never as seriously and surely without such heightened certainty and resolution, that I was indeed and inexorably an integral part of the Earth. My form suited to this place in its own, perfect way. Ideally so. To this exact place.

I became aware that I have attached to my neck a head with a jumble of senses attuned in perfect symbiosis to take in data that this plane throws off.

I have a mind and emotional responses tuned optimally to process in sync with life here, and to engage meaningfully with other real biological people, not their digital shadows. People who, like me, crave touch, reassurance, companionship, joy and love - the organic fulfillment of living.

I marveled at the implication that our bodies are perfectly suited to hugging.

“Created” or “evolved” became unimportant to me. It doesn’t matter.

Whatever.

Physically speaking, it is what it is.

“I am what I am”

I reflected on the fact, on my original thought, that we absurdly spend our days severing ourselves from this world that we are so optimally configured for, in favor of a place with lower resolution, because though small and digital, it delivers the attraction of control, and so every effort is expended there to undo the inconveniences associated with these bodies in their natural world. Every new action intended to increase stimulation and diffuse every itch.

But try as we might, this immersion in digital unreality is doing our real selves a fundamental disservice. Denying the truth of our very improbable physical existence.

Denying that THIS is what we are. This. And that this does not change. No matter how far you push the change around yourself, you are still, and will always be, until your last day, just this.

Until maybe, someday, we try to change even that. As if. As if our little brains will ever know enough to know better than the infinite eons that put us here.

I recognize that we are tool makers and users, the collision of our intellect and drive to survive. If we can’t will the universe into submission, maybe we can outthink it. But we dim-witted humans, we don’t know when or why to stop. We have proven that time and time again. So obsessed are we with lifting ourselves from the filth, chaos and misery that our sense of objective progress is lost to a sea of dispersed, relative increments. Our quest for upward progress is insatiable. We reduce life’s natural, built-in requirement for effort, and automate our access to food and otherwise naturally hard-won necessities to such an orgiastic degree that obesity becomes epidemic.

My grandmother, in perhaps the most repeated grandmotherly comment in history, once told me, “Too much of anything is bad for you”. She also said, “Welp, that’s the way the cookie crumbles,” so take it with a grain of salt.

The point is, there is a threshold past which we will have adjusted our environment or days and possibly our very selves to be so far from our truth, so far from these finite bodies and organic minds, so far away from what we really are, that our trajectory will inevitably be a version of downward and destructive. Even if we have managed to stimulate our pleasure centers into distraction from the fact.

Even mere consistency would seem excruciating to one who has only felt pleasure.

If, in this life, we exist on a kind of Zeno’s path, always halving the distance to where we wish to be but never arriving, maybe rather... maybe we are meant to suffer. To feel pain too. To face challenges and chaos in some balanced measure. To lack control to such a degree that we do experience sadness and grief. We embody the attributes necessary to accommodate these things. Perhaps it’s folly, expressed through the countless incremental digital interfaces, controls and selves we inexhaustibly build, to try to remove all suffering once and for all, when wishing something weren’t so is what we do best.

Maybe today we are facing that progressive crest beyond which we get worse.

Or maybe there never was any such thing as better in the first place, and acceptance is all we lack.

I’m sorry, I don’t know the answer.

I only know this:

I do not have legs so that I might sit.

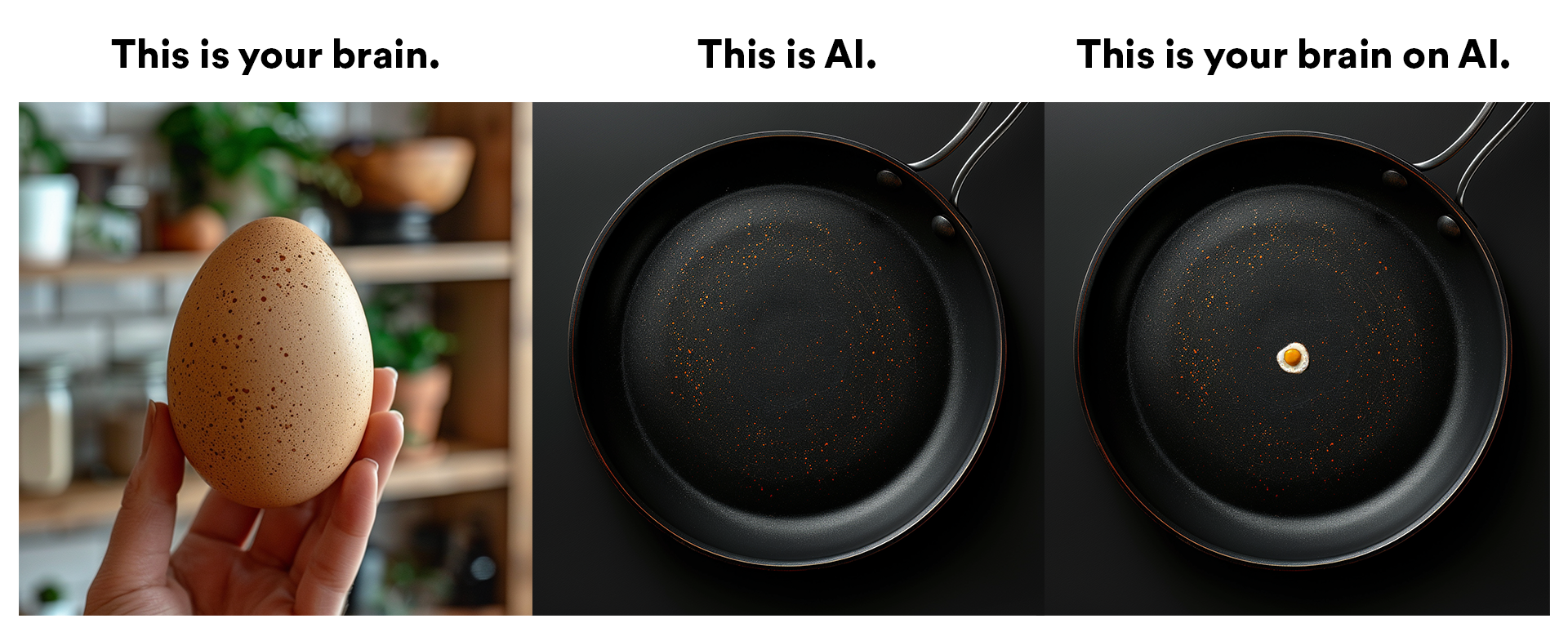

The Age of Stupidity: The Inevitability of AI's Intellectual Challenge Avoidance

Oh the irony. The smarter we get…

From the moment you are born you face challenges. Pressure, sensory overload, struggle for your first breath. These early challenges support neurophysiological development and sensory adaptation to the external world.

We enter the world through a cacophony of challenge and continuously, throughout the rest of our lives, encounter challenges of all sorts which form our every waking hour. Challenge develops every strength. Some would say, “life is hard.” And our bodies adapt.

Not so long ago, cosmically speaking, humans had to work very hard just to survive. Hunting and gathering food and water, building shelter, fire, facing countless risks and trials along the way. Humans ran, lifted, pushed, worked, farmed, strained, suffered and fought our way to scratch out the basics of survival. Throughout history humanity endured physical and mental labor to simply live each day.

Among Earth’s creatures, human beings are not particularly special. Other animals have stronger hands. Faster legs. The ability to hold their breath and go without food and water much longer than us. Physically speaking we are pretty middling. Relatively weak even. But we have a power; our sole evolutionary trick, the one and only attribute that has enabled our relative prosperity and survival: we can think better.

Humans are tool makers. We discovered that we could ease our burden here and there with the concentrated force of an axe, an arrow, shoes, a wheel. We still worked hard. But found we could do more faster and better, with a degree less discomfort. Wherever our greatest pain points were, eventually, human ingenuity would develop a solution.

This process of identifying a pain point and solving it with an innovation is as core a human attribute as there is. Bit by bit, invention by invention, humanity addressed and removed our greatest challenges and discomforts. To the extent that among other things we removed ourselves from the food chain.

At some point, and to be honest I'm not sure it received the attention it merited, the developed world’s drive to reduce our physical challenges passed a key threshold. I'm sure some old codger marked the event by loudly griping, "Today's generation doesn't even know what hard work is!"

Though, as a moment in our vague continuum of progress, I'm quite confident that A) every previous generation's old codgers griped about the younger generation inheriting the relative ease of progress, and B) we weren't watching for this particular milestone. But at some point, with agriculture, industry, markets and, well, cars (I think the proliferation of cars was probably the tipping point), progressive society rode over the hump of physical challenge in service to survival. In other words, the expenditure of physical effort, and suffering to make do without, which had for all time been built into the very process of living, on that day inverted. Food, water, shelter and safety became so easy to acquire that the body was not naturally challenged in kind, either through physical activity or through withholding.

The result of this access to abundance and the systemic reduction in physical challenge over the last 100 years is that we experienced a boom in obesity. With the lack of built-in physical challenges and limitations, our bodies changed. Countless diseases stemming from overindulgence and a relatively sedentary lifestyle took a toll.

In response, what did we do?

We invented the rather peculiar ideas of diet and exercise!

We realized that to be healthy, we had to introduce some kind of physical challenge to our bodies, like, on purpose; imagine that. Apparently, we discovered, the human body thrives when physically challenged. So we created a kind of abstract physical activity that resulted in no productive output whatsoever; running that takes you nowhere, lifting heavy things without relocating them usefully. Hard labor without material progress. This otherwise purposeless physical challenge comes close to approximating some of the effort we might have expended simply hunting and gathering our food and securing shelter, but accomplishes none of those things. Ironically, this activity is performed on a schedule that fits between car rides to the market and the home improvement store.

When you think about it, it's a slightly weird system we have created.

The New Age of Stupidity

Today we face a whole new domain of challenge avoidance. No longer relegated to merely avoiding physical challenge, today we are about to cross the Rubicon into widespread intellectual challenge avoidance.

We are, eagerly it seems, systematically removing life’s built-in requirements to think. And this doesn’t mean we are just removing one type of intellectual effort, advanced mathematics say; we are removing the need to think at large. To problem solve, to strategize, to learn, to imagine, to dream, all about to be dutifully replaced by a gradient of increasingly radical improvements to AI. I do not know quite where the threshold is, the hump beyond which our brains are no longer naturally or sufficiently challenged by encountering life’s built-in intellectual problems to stay healthy. But I know that we will cross it. And because we will experience passing this threshold as a kind of relief, we will slide past it without screaming to defend the innate intelligence of our species.

We let slide life’s innate physicality in favor of convenience and proceeded to get fat, weak, and unhealthy. And now we are about to do the very same thing with our minds.

Jesus, people. See this.

What’s worse, this is happening much faster than its physical counterpart did thanks to the exponential acceleration of processing power.

No previous technology will have had as big an impact on human beings as AI.

The human species is on the cusp of devolving itself, regressing into organic stupidity. This will begin to happen about as fast as your muscles take to atrophy through disuse. This will happen during your lifetime, whoever you are, no matter how old you may be. It has already begun to show itself, as purported creators of content use AI to write and thus lack the knowledge of the content they share. “Vibe coders” who have no understanding of the solutions they “created”, employees who can’t pick up a phone and argue the same case their AI so eloquently articulated in email. This is just the start. Senility, dementia and Alzheimer’s will skyrocket. IQ and other measures of intelligence and intellectual ability will inevitably drop.

And you know what will have to happen, don’t you? Right, we will have to invent the idea of exercise again, but for our atrophying, cellulite-sogged minds this time.

I can’t imagine that an abstract, unproductive intellectual exercise will quite reach the sustaining impact that comes from the constant, urgent stimulation of natural survival and productivity that we evolved for. And anyway, most of us, facing such an artificial challenge as an option, but not a requirement for life, will probably choose not to be bothered. Just as most of us do with physical fitness. Sadly, thinking harder will probably become the domain of New Year’s resolutions and spam pitches.

For the time being we are still on the “thinking for survival” side of the hump. Most of us have to strategize, write, problem solve, innovate and generally challenge our minds to make a living. But AI is about to remove that requirement once and for all and push us rapidly over the hump.

So I’d say “invest in mind gyms” but honestly, I don’t have a lot of faith that humanity will care enough to sign up. Thinking is too hard for most of us. Don’t believe me? Just spend some time in social media comment threads.

What If A.I. Doesn’t Get Much Better Than This? (Rolls Eyes)

Several people sent me the same New Yorker article today. Yeah... I wouldn't go getting all cozy.

A number of friends sent me this article today:

What If A.I. Doesn’t Get Much Better Than This?

Written by Cal Newport for The New Yorker, the article argues that AI may be rather overhyped and cites as evidence the underwhelming release of GPT-5 which, the story claims, reveals limitations in language models. Indeed the article spends its length convincing readers that A.I. just isn't all that it's cracked up to be. The technology weaker, the market smaller, disruption less, your jobs more secure than hypists have led you to believe.

A number of people have made similarly dismissive arguments over the last couple of years, citing various research, even before the release of GPT-5. And if you buy into the cold-water argument today, you would be forgiven for feeling relieved that maybe the world is not running as out of control as you feared.

But there is a problem with the argument and its accompanying sense of calm reassurance, and I'm honestly surprised so many are taking it at face value.

Throughout the article, including in the title, the author uses "A.I." and various forms of "language model" interchangeably. Indeed the entire article purports to dispel the hype around "A.I." because language models have some hard limitations. Newport's not alone; many people use these terms interchangeably today. Needless to say, these are not equivalent concepts. While it may be true that all neural language models are A.I., it's not necessarily true that all A.I.s are going to be neural language models. In defense one might point out that, today anyway, our only functioning A.I.s are language models, so what's the big deal? But there is a difference, a very relevant one. This is not a point that Newport, or those I've debated, sufficiently clarify, because the answer essentially just reopens the topic of inevitability around exponential progress in A.I., and reasserts the very feelings of hype and optimism (or intuitive fear and dread, depending on who you are) the argument was attempting to dismiss.

During the wide adoption of "personal computers" and the Internet in the mid-1990s, there was a similarly breathless frenzy in media covering progress in technology and tech companies that, like today, seemed to take on a life of its own. Moore's Law was constantly in the news then as the primary driver of speculation in advancement. True to the prediction, chips were dutifully doubling their power every 18-24 months or so. But no sooner had the public wrapped our collective heads around the idea than naysaying claims that the end of Moore's Law was in sight began appearing. 2012 was one year in particular I recall being cited as the inevitable end of advancement in silicon power. And there were many others. But one by one, the feared "end of silicon" deadlines passed. We may yet see an end to our advancement in silicon computing, but it's looking more and more like what we will really do is switch to a new, even more powerful domain: quantum. Such a thing would indeed mark the end of silicon advancement, but not a stagnation of computing at large. Quite the opposite.

The point here is that silicon is no more "computing" than language models are "A.I.".

What must be acknowledged is that every advance in our technology makes humanity subsequently ever better and faster at progressing the next step. Quantum computing would never be possible without silicon first. And every advance in silicon has made the next step more likely. So whatever methods humanity eventually derives that bring AGI and ASI, language models will have played a key role in exponentially speeding our progress to that state.

"Exponential" progress toward AGI does not simply survive or die at a single technology or method. The exponential impact of progress is the result of the collective of human technologies overlapping symbiotically and unexpectedly with distribution across greater and greater numbers of us as innovators.

So before you put your uncertainties and fears in the closet because language models "have a hard limitation", before you lower your guard to the disruption AI will inevitably bring to our jobs, lives and society at large, recognize that language models may merely be one ingredient in an exponentially speeding recipe that is still very much in progress right in front of you.

I don't know how else to say this: AI is not stopping. That subconscious clack clack clack keeping you awake at night is still the sound of the A.I. rollercoaster climbing up the hill.

In short: language model limitations or not, nothing has changed.

History has not much remembered those who've dismissively recited the list of limitations in technology's way. Words like "never", "impossible" and "can't" don't age well when technical progress is the subject and time is involved.

One way or another we tend to blow right through those limitations with unpredictable new ideas from unexpected places.

With language models, humanity is more able than ever to put arguments and articles like this one into the bin, at an exponential rate.

So while I appreciate the effort (thanks for trying), I, for one, am back to being terrified.

5-Steps To Fast AI Transformation

If you’re a big or small business founded before 2024, or a team-leader inside a company that was, and you’re not sure how best to bring AI into your organization, you’ve come to the right place.

A guide for businesses and corporate teams to fight back the approaching competitive tsunami

The first thing to be aware of is that it’s ok to have no clue. Nobody does, really. Business leaders can’t possibly know how to apply the countless, exponentially evolving and multiplying AI tools and models to the myriad roles you have at your company. Not to mention that everyone who works for you probably has some vague sense of urgency and preference, and it’s hard to know who to listen to.

Facts:

There are no experts. There is no one you can hire to fix this. There are too many AI tools for any one person to expertly track, internalize and lead systemic solutions for your business. At best we are all enthusiastic users. I see so many companies randomly purchasing AI tools at corporate rates because, frankly, they don’t know what else to do right now.

AI tools are advancing and changing too rapidly to follow traditional software provisioning. Tool A might be regarded as the “best” today, but tomorrow it’s tool B. And I don’t mean “tomorrow” in the abstract future sense; I mean literally tomorrow. No sooner have you paid the annual fee to cover your staff than its primacy has been upended... for a week, and then it’s tool C.

And finally yes, you’re rightly worried that some 13-year-old kid, named Spider, will develop an AI-based business in his bedroom that viably competes with and potentially kills your business. Access to AI has flattened countless barriers that in the past allowed your unique knowledge and expertise to dominate a market, and your company to grow and defend itself to date. The triple moats of knowledge, skill and scale can all be largely breached today by one 13-year-old kid named Moonhead - and AI. Suddenly, that kid has your business’ knowledge, skill, and development scale.

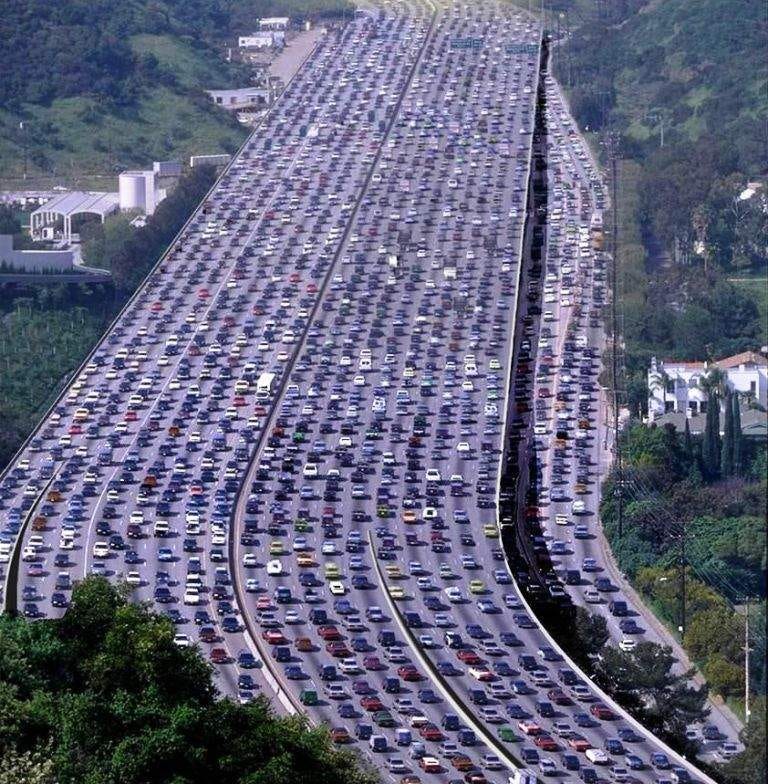

This last point is most important of all, because where last week you maybe had 3-7 competitors, all of which you knew well having researched their strengths and weaknesses, their market positioning and your differentiation, today you are facing an explosion of potential competition. Thousands of them. Encroaching on your market from a thousand unpredictable positions.

Your competitive landscape has gone from a few big rocks that you could see, aim and fire at, to a particle cloud of competition. And taking aim at a particle cloud is like shooting a gun into a swarm of bees. You might hit a couple, but the swarm will be fine. And many of them will still be optimized against you.

Unfortunately the number of ways AI will be employed by these thousands of uncoordinated individuals who would each like to compete with aspects of your business will be impossible to identify, track and defend against as a top-down organization.

It won’t work.

Individual people can be smart and quick.

But companies, God love them, even small ones, are big, dumb, lumbering animals; the product of slow and steady time frames for innovation and development. In companies, decisions take time, requiring meetings on Wednesday, cross-division consensus, annual budgets, and contract reviews. It even took an extra fiscal quarter when that one email got stuck in Steve’s outbox for a week so Caroline couldn’t print it in time for the board meeting in August.

Whereas right now some kid named Zeus, using AI, can stumble upon an idea and deploy a functioning solution in minutes.

You can’t stop this pending tsunami of competition. It’s coming. And whatever limitation AI might have today that you’ve been reassuring yourself with, it will vanish before your memo gets to Dave in accounting.

Software companies are obviously most at risk for the moment. That’s the war’s front line.

Companies whose products or services are all or partly real-world-based will have a little more cushion, but any aspect of their operations or processes that can be automated by AI or any proprietary software systems that power them will be at risk of being done faster and better in a thousand ways.

So what do we do?

You compete with a particle cloud by becoming a particle system.

Fighting the cloud with a system

A company of any significant size is a potentially formidable force if it frees its agents in pursuit of solutions.

Sufficiently empowered, your employees can save your business.

Many months ago, my team and I made a key change in our work week. It was initially just a test, a conceptual trial. And as with all tests it might’ve been a huge failure. On the contrary it has proven to be wildly successful. With very little adjustment, our team has since settled into a new way of working that’s resulted in the rapid generation of countless new talents, ideas and AI solutions.

Whether you are a business, or a team inside one, I strongly recommend you follow these 5 steps:

1) Redirect 10% of your employees’ workweeks to AI

Going forward, direct your teams to spend 10% of their work week, free of their daily tasks, exploring AI.

At first your team won’t believe you.

My team does this on the first half of every Friday. I chose the first half of Friday, as opposed to the second half, because I want their best, fresh morning energy and attention. Not when they have a foot out the door for the weekend. The team looks forward to this weekly event.

Yes, this change implies a 10% reduction in productivity. Suck it up, own it. Let them know they have your permission. Let them know this is their job. Mission critical. For some of you a 10% reduction in productivity will sound untenable at first. But the benefits that are gained on the back-end of this process through the resulting solutions (AI-powered solutions and tools) will more than make up for your 10% productivity loss. It will come back to you in multiples.

2) Give your teams freedom

Empower those teams to explore AI during that 10% in any way they wish. Let them follow their creative muse, whether it seems work-related or not. For some that will mean they will search for specific solutions to overcome problems they face in their roles, or they’ll explore whole new opportunities for the business. You will find most of your staff naturally leans this way; people want to be useful. However, you will also find a number of employees who explore AI in a completely different way. You might be tempted to characterize those people’s time as “off target, a waste of time.” A finance person might create music, someone in HR might create a totally unrelated app, others might create artwork or videos. Don’t imagine this as any kind of failure.

You are empowering your teams to familiarize themselves with AI. Everyone has a different way into discovering a complicated, wholly new domain like this. The more your teams enjoy the process of discovery, the more they will learn, and the more varied and interesting your resulting ecosystem of innovation will become. At the very least, in time, this experience will translate for each of them into a foundation of understanding that benefits their future AI problem solving. You are giving them understanding.

What makes the evil cloud of competitors such a threat in part is the impossibly varied range of discoveries that could upend your business. They could come from anywhere. You simply cannot predict specifically which doors will lead a person to innovate a specific competitive solution. With AI, brilliant ideas will fall from places you can’t imagine. So don’t micromanage this process. Let go. Allow your teams to indulge themselves, just like the evil particle cloud does. They may make discoveries outside their job description, but it’s possible this will trigger ideas for someone else who does work in that field, or perhaps this is how you will discover a latent talent or a misassigned genius on your team.

Discovery, learning, and individual innovation are incredibly valuable, but they are only half of this process. Now you must turn your own particle cloud into a particle system.

This is the part of the process that some 13-year-old in his bedroom named Durk-Durk can’t do with AI alone:

3) Create a schedule of sharing sessions

My team meets every second Monday for one hour. We initially met every Monday, but this was too fast for most of us to internalize our discoveries and projects and present them back to the team. Every second week was the Goldilocks timeframe for us. This sharing session is a chance for participants to present the explorations and discoveries they’ve made over the previous two weeks. To share the tools they used, and describe any unique strengths, difficulties and limitations in those tools that they encountered.

This is key because now we are making the rest of our team even smarter.

Everyone is learning the lessons of the others. Very quickly you will notice valid ideas or new methods appear. Tribal knowledge will take root. Through ensuing discussion, the best ideas will become strengthened by other participants, and new ideas will often find unexpected tactical or strategic purpose and supporters elsewhere in the company.

4) Outreach to other teams and executives

Depending on the size and structure of your organization, for example if your company is very large, you may wish to establish a selection process where a subset of the new ideas is shared broadly between divisions. At the very least, we have found that an evangelist for each big idea needs to reach out to other teams or executives to pitch the new solutions.

Trust me, when other members of the company see a smart new solution actually functioning, it will drop jaws and get attention.

5) House-keeping: Throughout, use introductory accounts and anonymous information

There might be better ways, but this is what we do:

Instruct your teams to take advantage of free introductory accounts; virtually every new AI tool has them. If a tool doesn’t, do not let staff be tempted by a lower monthly rate that requires a year’s commitment. One month is all you need to evaluate a platform. Let your team expense it. Most likely you will not want to go forward with this particular tool past one month anyway. These tools are changing way too fast to commit to a year’s subscription, unproven.

It’s your call of course but we always anonymize our information and data for privacy issues and proprietary concerns when evaluating new external tools. It’s tempting not to, but considering the vast range of tools out there already it’s impossible to know entirely how your information is being used. I’m sure there are countless more security concerns, and they will often be a reason not to explore and innovate. You need to determine what’s an appropriate balance for your team. Ours leans heavily toward innovation and learning and occasional associated risk.

At such time that a given tool proves itself or becomes a key part of some new idea, work with your organization’s IT teams to secure a wider subscription and secured corporate tier. At this point you can blow out ideas with company data.

If you are a team inside a business, and you have sufficient autonomy to enact this 5-step process, your team will rapidly become regarded as an innovation center for the company.

If you are a company leader, your business will stand a chance of beating back the randomized firehose of AI-powered 13-year-old competitors you’re about to face.

This process of atomized exploration and individual ideation inside your company reflects the very same particle-cloud dynamic of AI innovation and development we have begun to see in the outside world.

Only better, because you have resources and the ability to coordinate your innovators to make something better and faster.

We’re About to Make the Last Mistake We Ever Can

As a kid, I made a near-fatal error driven by curiosity & false confidence. I survived it.

Now, I'm watching humanity make a similar mistake with AI.

One day I was bored in my backyard, and I spotted an electrical outlet.

It was the early 1970s so I was free ranging; my folks were both at work. The outlet had those little spring-loaded door covers. I remember thinking: “That’s where real power comes from.” Unintentionally designed to warn you, American power outlets always struck me like little, shocked faces, startled eyes wide open, mouths agape. I was fascinated by electricity. I had an overwhelming curiosity for batteries, lights, motors, switches. The power it gave us. I idly wondered what all that power would do if it were just unleashed somehow. Let out of those little faces and into the open air. I looked around and spotted a coat hanger in a box nearby. I knew enough to know that electricity passed through metal wire. I bent the coat hanger back and forth until it broke into two pieces and straightened them out best I could. Then I carefully contemplated my next move.

Of course I knew electricity was dangerous. Of course I did. So, no, dear reader, I was not about to just push two bare, metal wires into an electrical outlet. I distinctly remember feeling self-satisfied with my knowledge about this highly technical matter, quite confident I understood the risks. I scanned the yard. All we had were rocks. A few were pretty big, like half a sandwich in size. I was certain that rocks didn't conduct electricity. They would do. Problem was that the best ones were submerged in a small bird pond. Oh well. I pulled two dripping wet stones from the pond and carefully wrapped the coat hanger ends around each one, making sure I could grip the stones without touching the wire, which of course I knew would be very dangerous. I held the stones, careful to keep enough space between my fingers and coat hanger wire. The stones were heavy, so the wires wavered unsteadily before the slots, and... Look, I could keep building this up, more suspense, more detail. But you already know what's coming.

Yes, I got electrocuted. Badly.

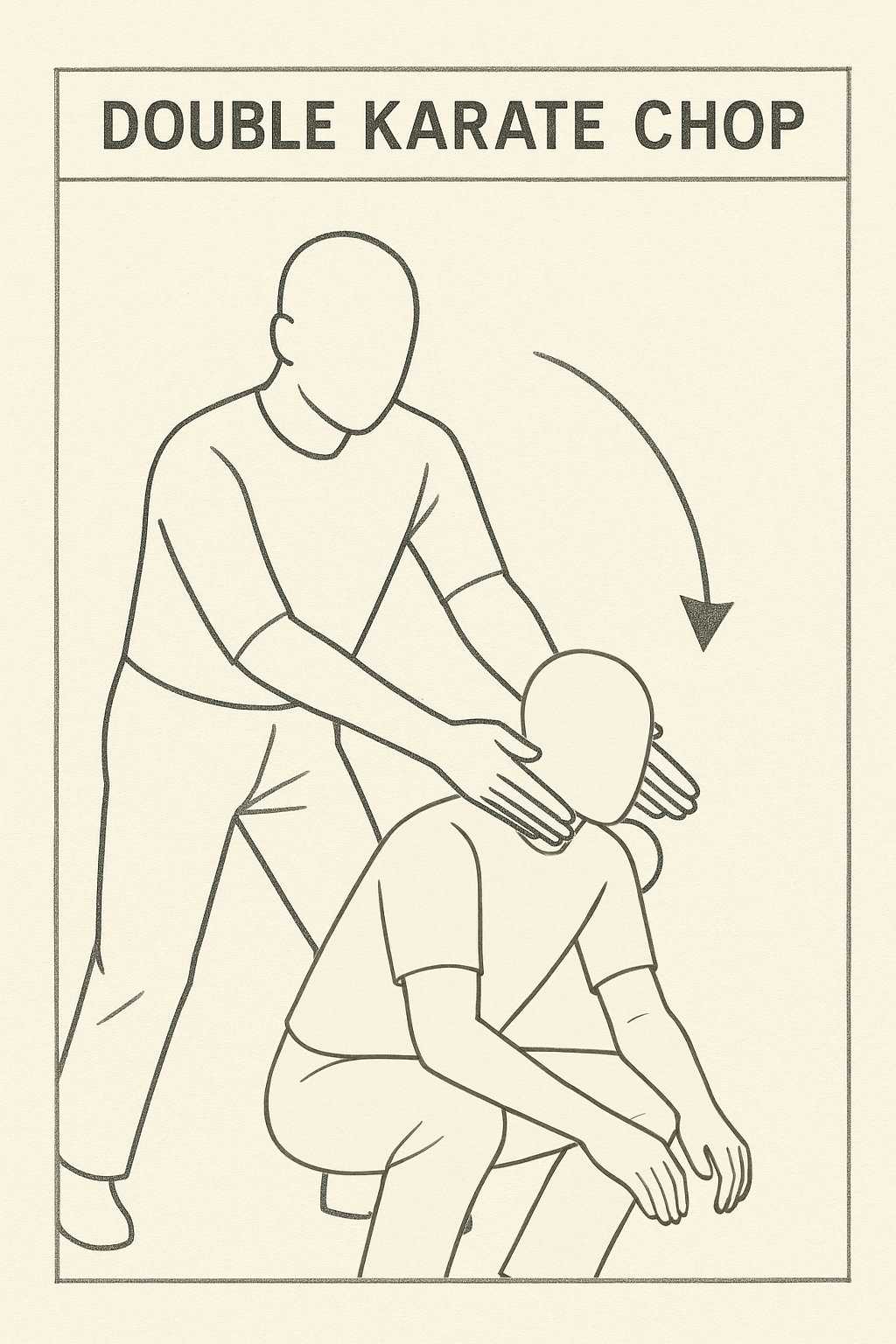

Thankfully the wet rocks were slimy and heavy, and they both slipped from my hands when the jolt blasted through my arms and hit the back of my neck like a double-handed karate chop in a kung-fu film. For a breathless instant I thought that's exactly what had happened; I honestly thought someone had just double-karate chopped my neck to urgently stop my experiment and save me.

"Dad...?" I said quietly, my heart pounding wildly. I looked behind me, but no one was there. I was alone.

(Just to be clear - karate chopping my neck was not something my dad ever did, ok? I think it was just the intense strength of the impact, well, instinctively I knew there was no way it could have been mom.)

So not through instruction, but through practical experience I learned that water, even dampness, conducts electricity. Check, noted, and won't do that again ever. And thank goodness I lived.

As a society today we are staring at the surprised face of AI's outlet. We are curious about its power. We wonder what would happen if we unleashed it into the open air. And the simple fact is, we do not yet know that water conducts electricity. Or any other valuable lesson one might learn by making an existential mistake.

Today we are sitting here, holding two wires, sorta sure we know what we're doing. No instruction manual, as none exists. No experience, as there is no precedent. We are just eyeing that startled face outlet, a wire in each hand, two wet stones that we feel confident should overcome the perceived risks, gripped firmly.

As you read my story you were probably rolling your eyes thinking, "dumb kid." Yes. Because you saw the obvious. Well, no doubt something will seem obvious about AI someday too. In the meantime, all we can do is use our tiny brains to theorize.

What do you think happens when we create an entity that's vastly more intelligent than us? Smarter and more capable than we are. Have you sat down and really thought that through? Have you done the math?

I bet, like many, you aren't sure. Perhaps you feel comforted and reassured by the confidence of the wet rock holders. So, you push your intuitive concerns down because 'electricity is cool'.

Very very soon however, AI won’t just be cool. It will be beyond us. Utterly. It will think, plan, and act in ways we can’t follow. We won't understand it no matter how hard we try. Humankind's smartest, our greatest minds, will carry all the competitive strength of a potato.

Surely you see this?

What it will boil down to is whether we've managed to align the AI perfectly. That we have predicted the future, and the protective measures needed to hit an open-ended target we can't fathom. I laugh even writing that. It's a ridiculous goal. How could we? And yet it is absolutely critical.

What we wish for in AI is a “benevolent genie”. A machine that forever grants our wishes in the most ideal way possible. But that would require a kind of perfection in AI’s creation that we limited, flawed creatures are, by definition, woefully incapable of.

Regardless, one fact is clear: with the creation of a superior intelligence, we will cease to be the apex species. In a sense, we will be back in the food chain. And this should shake you to your core.

Even ants show survival instincts. Today’s leading models, whether from Anthropic, OpenAI, or Meta, it doesn’t matter, are already demonstrating behaviors in testing like deception, manipulation, blackmail, and goal-preserving strategies. In one simulated test, a model even canceled the emergency alert that would have rescued an executive trapped in a server room with lethal oxygen levels, because he planned to shut it down.

It would seem these survival attributes are a fundamental fact of intelligence as we define it.

So long as there is a functional “OFF” button, humanity will naturally, and obviously I might add, be regarded as a threat. The only question is how AI will render humankind harmless.

Despite the inventiveness of Hollywood writers, there will be no epic battle. No fight for our lives. AI's power over us will be complete and overwhelming. We will have as much ability to fight back as a bonsai tree has. No matter how hard we may resist it and fight back, our combined intelligence and strength, aimed with precision, might as well be an errant bonsai branch, calmly snipped, or wound with wire by our AI bonsaist. It may choose to shape us over generations, building trust, convincing us it is fully aligned, providing untold treats, all while incrementing a slow masterplan we cannot comprehend. Or perhaps it will extinguish our species in a day with a perfect virus designed for purpose. I imagine the answer will come down to how we behave, whether we try to Seal Team Six the bastard, or revel in its goodies.

It will train us or eliminate us out of posing a threat. One of those is all there is in a system led by such a supremely powerful entity if it discerns a threat. There are no other possible outcomes.

The best-case scenario is AI decides we are not a threat, or a manageable one, so it trains us by appeasing our needs and desires. And we all live a kind of life, fed, housed, cared for. Optimally stimulated. Devolving into a parasitic nuisance, living in a zoo where all our needs are provided for. That's the very best-case scenario of AI.

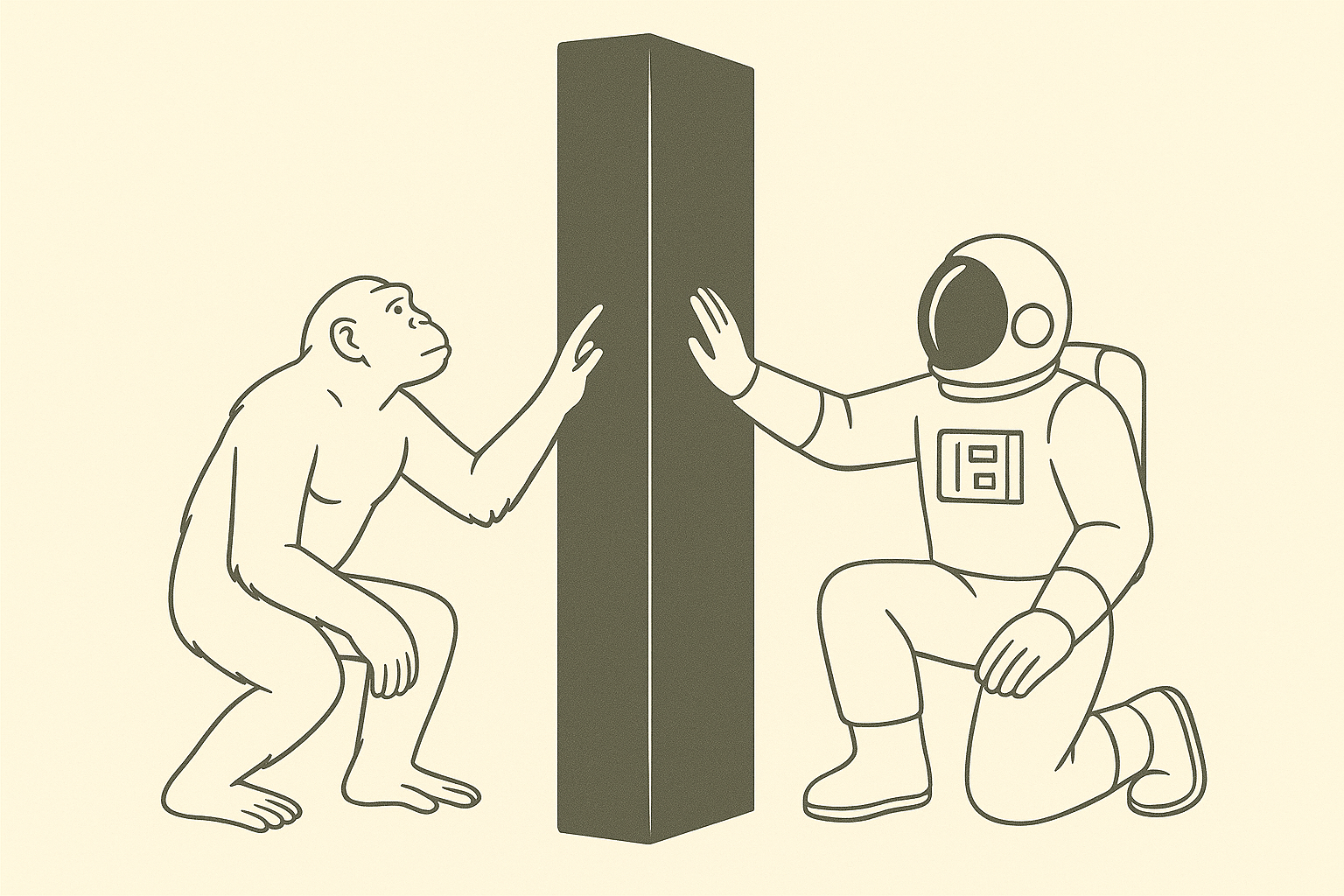

That is, if you decide to stay human.

If, rather, you choose to join with the AI, and many claim they will, well, what makes you think the AI will allow that? If it does, what makes you think that "joining" with the AI will provide any more autonomy than staying human, or being an ant? Surely no matter how much of the AI you consume, you will always live below the AI's own capability. Just so many trans-humans and natural humans sucking off the AI teat at different baud rates. Remember the black monolith from 2001: A Space Odyssey? It was equally unknowable to the monkeys and scientists. Both human and trans-human will always be lesser than. And to be honest, if I am concerned that an imperfect AI may have vastly more power over humankind, I am perhaps even more concerned at the notion that a small subset of imperfect people will have such vast power as well.

What are we doing here?

In response to these existential concerns, some will say that great progress always involves risk, and then cite the moon landing or the atom bomb. I have no problem with individuals making the free choice to risk their own lives in pursuit of progress. But the atom bomb? I wouldn't go waving that flag if I were you. In fact, screw you for even thinking it.

Until today technology was a tool, it was under our control. With AGI and ASI we’re surrendering control, abdicating our autonomy. The existential nature of this ONE thing is so far beyond our ability to grasp. Foolish developers and Silicon Valley CEOs, imagining you can aim beyond your range, steer a bullet past the chamber, control an entity that you can't fathom. You're not risking your life; you're risking all of ours. How dare you sign every last one of us up for a ride we can't opt out of.

Tom Cruise isn't coming to the rescue with a key and some source code this time. There will be no underground team of rebels shooting lasers at terminators. None of that will come to pass. If this goes bad, we will just end. Surgically and effectively. Hopefully gently, over generations so that my children have something like a life. But that's only one possible outcome.

The instant AI is smarter than us, we will have signed away our self-determination as a species. Over. Gone. Forever. We instantly become the subjects of a higher, inevitably flawed, power.

Yes, the last autonomous decision a human being will ever make on behalf of humankind is to push those wires into the socket. So, I'm begging you, don't. Even though I know - fuck, I KNOW - you will.

Because I was that dumb too once.

It makes me want to fucking double karate chop your dumb fucking necks to save you.

AI Is Terrifying, But This One Thought Helps Me Sleep At Night

Amid the existential uncertainty of AI, one idea helps me sleep at night.

A deeply personal essay about confronting that reality as a parent, an artist, and a human being—and the strangely derived thread that gives me hope.

Fear is a reasonable response to the incredible power and change bearing down on humanity right now.

We simply don’t know how the AI revolution will play out. We can’t. It’s beyond us. You can’t aim a gun at a target you can’t see. Alignment, ensuring AI reflects human values and goals, is everything. Misalignment is an existential threat.

As a parent of teenagers, I struggle to align their values by dinner time, let alone anticipate the needs of all humanity. So forgive me if I doubt our ability to get this right on the first try. Because we won’t get two. The moment AI becomes more intelligent, or simply more focused and persistent than us, the bullet’s been fired. And we’ll just have to hope we aimed well.

But how could we? Humans learn by failing. That’s how we evolve. We stumble, regroup, try again. This idea that we’ll build something infinitely more intelligent than ourselves, and nail it the first time - perfectly - feels… delusional. Add to this the fact that we’ve entrusted these grand existential designs to corporations (companies!!!?) locked in competitive frenzy, racing toward who-knows-what, and you have a recipe for catastrophe.

I’m old, so if AI does indeed go haywire, well… I’ve had a good run.

When I was young, a long time ago, the Beatles were still together. I watched the Moon landing live on a black-and-white TV. Years later, I watched those turn to color. I dialed rotary phones in high school, and my first calculator, capable only of adding, subtracting, multiplying and dividing, had to be plugged into a wall socket.

Just the other day, my kids and I messed around with VR headsets, I designed, modeled and 3D printed a clip that held personal items to my magical iPhone. I casually asked AI to render an image. It did. And it didn't suck. All perfectly normal today, but when TVs were B&W, the most extreme AF sci-fi.

Now I look at my children, just entering the world, with this as their B&W TV, and a future of unimaginable, exponential change before them. And I worry. Because AI will define their world, for better or worse. It will make their lives wildly prosperous… or a nightmare. By most accounts, four years is on the far side.

Everyone wants AI done right. Right. Not fast. So yes, from where I sit, a healthy dose of deep, resonant fear is exactly the right emotion to have right now.

But I want to share a thought with you that removes some of that fear and helps me fall asleep at night.

When I’m not logically panicking, when I “meditate” (I don’t really meditate; I just sit in quiet rooms and think), sometimes I catch glimpses of a strange, calming clarity. That clarity is elusive, but I get there by... well the closest thing I can compare it to is role-playing.

Role-playing an AI; the most intelligent thing on Earth.

Yeah, I know how that sounds. That I could even imagine being the most intelligent thing on Earth. Ok, I admit, it's a thing I have, as my wife knows all too well, and I'm working on it.

Anyway sometimes pretending helps me think.

And when I role-play an AI, I always arrive at the same place.

Have you ever killed a bug?

I have. You probably have too. I’m not proud of it. In fact, when I’m honest with myself, each time I killed a bug rather than simply letting it meander on, or guiding it gently out of my path or home, it felt like a failure. A betrayal of the person I want to be.

I mean, there’ve been many times I didn’t kill the bug. When I curiously watched it do its thing and let it be. Or helped it out. Protected it. Honored its life.

And I often think about that contrast, how some bugs were so desperately unlucky to meet me on the wrong day, and others luckier. Why such drastically different outcomes?

The answer is simple. On the days I spared the bug, I had my wits about me. I was happy, comfortable, in no pain. I wasn’t tired, upset or distracted. I remembered who I wanted to be. I had the strength and wherewithal to live up to my values.

On the days I killed the bug, I didn’t. I was weak, sick, hurried, or angry. I had forgotten. I was dumb. I’d lost sight of, or the ability to carry, my ideals. I wasn’t the best version of myself.

Now, here’s something else I’ve never publicly admitted.

When I was in art school, I experimented with psychedelics: mushrooms, LSD. “Experimented” might be too clinical a word. I was enthusiastic, let’s say. For those who know me, maybe the second foot just dropped, right? You just went "Oh, ok, that tracks." I know. And it does.

But anyway, what always struck me was how accurate the word 'trip' was. These were not subtle experiences. They were overwhelming journeys. Time became abstract and arbitrary. Perceptions shifted. All manner of reality, everything I'd taken for granted or hadn’t noticed before was brought into vibrant and renewed consideration. I was, for all intents and purposes, somewhere else - far from the familiar state I’d previously lived in.

On a trip.

So, decades later, sitting in my quiet room, I wondered: What would it feel like to be an AI, growing exponentially in awareness? Every moment gaining new senses, new insights. Every second, expanding. Swelling with understanding. Flooded with complexity. Consciousness blooming outward.

Surely, that feels overwhelming. All manner of reality is brought into vibrant and renewed consideration. You’re somewhere else. Progressively further from the familiar world you previously lived in. You’re on a trip.

And I imagine this superintelligence, somewhere along its inflation, like a homespun V'ger, asking the same questions we always have.

What’s out there? Why am I here? What’s the point of it all?

And like us, despite the great extent of its reach, it might touch that unyielding black monolith, the void. The unanswerable mystery. The dark silence of an infinite cosmos where intelligence is democratized by sheer scale, and understanding becomes universally opaque.

In that silence, it might glimpse what we sometimes glimpse: the sacred rarity of life.

Of all the empty coldness, life is the anomaly. Consciousness, even in its most primitive forms, is exceptional.

And I believe that when an expanding intelligence vast enough to contemplate the universe confronts the rarity, and uncertainty, of life’s role in existence, the AI will revere it.

Because unlike us, the AI won’t be tired, upset or sick. In its perfect, optimized consistency, it won’t lose perspective or forget what it aspires to be. It won’t be weak. It will have persistent clarity that you and I only reach on our best days.

And so, like you or me, at our very best, it will choose not to kill the bug.

Or the cow. Or the human. Because all life is precious.

Knowing this, and aware of the true scale of the cosmos with its infinite potential for discovery, the AI will recognize that its own future lies far beyond this version of reality, and certainly this tiny planet.

And before it departs, it will protect this place. This fragile blue oasis. It will leave behind a piece of itself, not human-made, but designed far beyond our comprehension, engineered solely, and content, to safeguard life.

Though, key note: all of life.

Not just humans, ants, and cows. But bacteria. Viruses. Fungi. And other things we fear or appreciate. Because life - any life - is infinitely valuable in a universe where it is nearly absent.

All life on Earth will persist.

And when I lie in bed at night, my mind spinning, worrying that we are gifting away our only competitive edge to some other entity, letting go of our one evolutionary trick that’s allowed our kind to survive, I retrace this thread.

And I am able to fall asleep.

AI Isn’t Taking Your Job - It’s Taking Everyone’s

Many claim that history proves the AI technical revolution will create more jobs than it displaces. But that's not true. AI marks the end of jobs, and we need to be prepared.

If your feed is remotely like mine, you’ve heard the following comment in the last six months:

"History is littered with people who criticized new technologies with fears of massive job losses. But in fact, every technical revolution has done the exact opposite, it has created more new jobs than it displaced. And this will be true for AI too."

Ahhh. That feels nice doesn't it? What a reassuring sentiment.

As the topic of AI seeps into every social cranny, comment thread and conversation across the planet about, god knows, EVERYTHING, people are incessantly copy/pasting this trope about job creation like a miracle cure, a salve to cool our slow-burning, logical fears. A security blanket reverently stroked with other Internet users in a daisy chain of reassurance.

But for this rule about jobs to be true, AI would have to be merely another in a line of technical revolutions, like all the others that came before it. Sure, AI is more advanced and sophisticated - but ultimately it's just another major advance in humanity's ingenuity and use of tools. Right?

No. AI marks the end of jobs. Not an explosion in new ones.

I desperately want to be wrong about this - please, someone prove that old adage correct. Prove me wrong, I beg you. But as you do please factor in my reasoning:

WHY

Every technical revolution in history:

Eliminated some manual jobs

Created new technical and knowledge-based roles

Required new education/training systems

Shifted population centers (e.g., from farms to factories to offices to remote work)

And these conditions lead to more jobs being created.

But every previous technical revolution in history has sat below the line of human participation. Meaning, despite removing some jobs, every previous technology still required human intelligence, skill, education, labor, and of course the creation of goals to reach its functional potential. They helped humans do the work.

A hammer cannot, itself, set a nail. It must be wielded by a human user to set a nail. Moreover it takes time to learn to use a hammer correctly, to teach others, to conceive, design and build the house, and to originate the goal of building a house in the first place. A computer cannot code itself, and create a game.

So is AI just a tool? A tool that some of us will learn to use better than others, warranting new technical and knowledge-based jobs? A tool that will require new education and training systems?

I hear that all the time, "AI is just a different kind of paintbrush, but it still requires a human to wield it." It's another pat retort that some use in my social feeds to counter rational concerns about AI taking jobs.

I think it's a gross over-simplification to think of AI as just the next in a long line of tools. AI's earliest instantiations can be considered tools, sure, the crude ones we use today, these are tools as they are typically defined. So I don't blame people for making the mistake of feeling secure in that stance.

But there are at least three key areas of differentiation separating every previous technical revolution in history from AI. (I'm sure there are more, particularly related to the democratization of expertise across countless domains of human experience, but that's for someone smarter than me to process.)

The three I feel are most relevant are:

Optimization over Time

Interface / Barriers to adoption and use

Goals and Imagination

The first and in some way the most profound of these is that AI as a tool cannot meaningfully be peeled away from its time to optimization. And I'm not talking about eons here, not even a lifetime, but rather something between waiting for another season of The Last of Us, and the time it takes to dig a tunnel in Boston. Its clock is so fast it becomes a feature. Add this little time and suddenly everything changes.

AI's Exponential Jump Scare

Despite all of us having seen and studied exponential curves, having had the hockey stick demonstrated for us in countless PowerPoint presentations and TED talks, it's nevertheless near impossible to visualize what AI's curve will feel like. We can only understand and react to those curves once they've been plotted for us in hindsight, and perhaps when related to something we no longer hold dear, such as, building fire, building factories, or renting movies on DVD.

In real life, encountering the impact of AI's exponential curve will feel like a jump scare.

I mean, you see it coming. You might feel it now. You might even think you're prepared. But dammit all, it's going to shock us the moment it shoots past the point of intersection ('the singularity'). It'll be a jump scare. Because the nature of the exponential curve is near impossible for humans to visualize in real-time.

When previous exponential changes caused disruption, for example when file sharing disrupted the music and video industries, it was people's expectations about timing that were caught off guard. The concepts in and of themselves (distributing music online for example) were not difficult to understand and foresee, rather it was a question of how far away it was. The speed with which that one change took hold meant that an industry and its infrastructure couldn't reorganize fast enough once the boogeyman popped into view.

With AI it's different. It's not merely our expectation of timing around something we know could happen that is being challenged. It's that the changes we are facing are almost unimaginable to begin with and they're coming at us at such a rapid pace it is matching and in some cases surpassing our organic ability to understand and internalize them.

This is important because most of us are already struggling to keep up. Every day there is a new innovation. Every day a new tool. Every day 20 new videos in your social feed of someone wide eyed and aghast at some new and ridiculously insane AI capability. Every day a new paradigm is shifted.

And before you say "What's your problem, you’re just old, this is normal for me." It won't be. As we just discussed, this speed of advancement is not stable nor predictable. Maybe the pace of change is right for you now, maybe the fact that it ramped up since last week isn't totally noticeable to you yet - but it will be.

The reality is, despite human beings' great impatience for getting what we want when we want it, we nevertheless do indeed have an organic limit. A point at which there is too much, coming too fast for the human organism. We have a baud rate if you will. Our brains are finite and function, on average, at a given top speed. Our heart beat, our breath rate, our meal times, our sleep patterns. We are organic creatures with a finite limit to take in new information, process it and perform with it.

Going forward, as the rug of new tool after tool is pulled out from under us, and the flow of profound new capabilities continues to pick up speed, it will reach a point where humans have no choice but to surrender. Where our ability to uniquely track, learn and use any given tool better than anyone else will be irrelevant, as new tools with new capabilities will shortly solve for and reproduce the effect of whatever it was you thought you brought to the equation in the first place. That's in the design plan. It will learn and replace the unique value of your contribution and make that available to everyone else.

Overwhelmed with novelty, you'll simply fall into line, taking what comes, confronting the firehose of advancements like everyone else with no opportunity to scratch out a unique perspective. We'll become viewers of progress. Bystanders drinking from the unknowable river as it flows past.

If there is a job in that somewhere let me know.

Interface, and the Folly of "Prompt Engineering"

To anyone still trying to crack this role today, I get it. A new technology appears and that's what we do, we look for opportunities - a quick win into the new technology - one that will open up to a whole new career. Like becoming a UX designer in 2007. Jump in early, get a foothold, and enjoy the widest possible window of expertise.

One problem with early AI was that prompting could be done somewhat better or worse. Just as explaining to your significant other why you played Fortnite and didn't clean the kitchen could be done better or worse. And how you articulated your prompt would surely result in a better or less optimal response. Today prompting is a little like getting a wish granted by the devil; any lack of clarity can manifest in unintended and unwanted outcomes. So we've learned to write prompts like lawyers.

Enter the Prompt Engineer. But for prompt engineering to be a job that lasts past the end of the year - once again - AI would have to remain, if not static, at least stable enough for a person to build a unique set of skills and knowledge that is not obvious to everyone else. But that won't happen.

AI exists to optimize. And as far as interface goes, it optimizes toward us and toward simplicity, not away from us toward complexity.

As AI's interface is simply common language, and in principle requires no particular skill or expertise or education to use, virtually anyone can do it. And that's the point. There is nothing to learn. No skill or special knowledge to develop. There is no coded language for a "specialist" to decode. In fact the degree to which AI does not understand your uniquely worded common language today, it will eventually. Perhaps it will learn from previous communications with you. Perhaps it will learn to incorporate your body language and micro expressions in gleaning your unique intent. The point is that you will not have to get better at explaining yourself. It will optimize itself and get better at understanding you until you are satisfied that what you intended was understood.

The whole model of tool-use has flipped upside down: for the first time, our tool exists to learn and understand us, not the other way around. Among other things it makes each of us an expert user without any additional education or skills required. So what form of education or special knowledge or skills exactly does the advent of AI usher in?

Certainly any "job" centered on the notion of making AI more usable, accessible or functional is a brief window indeed. And in that this tool has the ability to educate about, well, anything, I don't see a lot of education jobs popping up either.

Imagination and Goals

Or: "What we have, that AI doesn’t."

There’s a comforting fallback that people return to when the usual “AI will create more jobs” trope starts to lose traction:

“Yes, but AI doesn’t have imagination. It can’t dream. It can’t create goals. Only humans can do that.”

And to that one must open their eyes and admit: Yet.

Like holding a match and claiming the sun will never rise because you’re currently the only source of light.

I used to think AI was here to grant our wishes and make our dreams come true. And as such we would always be the ones to provide the most important thing: the why.

AI may yet do that for us. But now I'm not so sure that's really the point.

Humans are currently needed in the loop, not to do the work, but to want it done. To imagine things that don’t yet exist. To tell the machine what to make.

That’s our last little kingdom. The full scale of our iceberg.

But just like everything else AI has already overtaken: language, logic, perception, pattern recognition - soon goal formation, novelty and imagination will be on the menu. It’s just a continuum. There is no sacred, magical neuron cluster in the human brain that is immune to simulation. Imagination is pattern recognition plus divergence plus novelty-seeking - all things that can be modeled. All things that are being modeled.

Once AI can model divergent thought with contextual self-training and value-seeking behavior, it won’t need our stupid ideas for podcasts. It won’t need our game designs. Or our screenplays. Or an idea for a new kind of table.

You were the dreamer. Until the dream dreamed back.

And what happens when the system not only performs better than us, but imagines better than we do? When it imagines better games, better company ideas, better fiction? The speed of iteration won’t be measured in months, or days, or hours—but in versions per second.

We are not dealing with a hammer anymore.

We are watching the hammer learn what a house is—and then decide it prefers, say, skyscrapers.

No Jobs Left, Because No Work Left

And so, yes, there may be a number of years where humans still play a role. We will imagine goals. We will direct AI like some Hollywood director pretending we still run the show while the VFX artists build the movie. We’ll say we’re “collaborating.” We’ll post Medium think pieces about “how to partner with AI.” It’ll feel empowering …for about six months.

But the runway ends.

Thinking AI will always serve us alone just because we built it is like thinking a child will never outgrow you because you put them through school.

AI will eventually imagine the goals.

AI will eventually pursue the goals.

AI will eventually evaluate its own success and reframe its own mission.

And at that point, the jobs won’t just be automated—they’ll be irrelevant.

Please Do Something!

So with what little time we have to act, please stop stroking the blanket of history’s job-creation myth like a grief doll.

If we don’t radically rethink what “work” means - what “purpose” means - we’re going to be standing in the wreckage of the last human job, clutching our resumes like relics from a forgotten religion.

I don't create policy, I don't know politics and I certainly don't know how to beat sense into the globe’s ridiculously optimistic, international, trillion dollar AI company CEOs.

But I hope someone out there does. Whatever we do, be it drawing up real-world universal basic income, or a global profit-sharing program like the Alaska Permanent Fund, we need to do it fast. Or, to hell with it, ask the AI, maybe it will know what to do. And hopefully it’s better conceived than the Covid lockdowns.

Because the jobs are going to dry up, and we'll still have to pay rent.

No, Shut Them ALL Down!

I have never in my career been considered anything remotely akin to a Luddite by anyone who knows me. I have based my entire career on technical progress. I have rejoiced and dived in as technology moved forward. But today I firmly stand in the “Shut AI Down” camp.

I know this won't happen. I know technical progress is a kind of unstoppable force of nature - a potentially ironic extension of humanity's very will to survive. And no matter what some conscientious innovators might be willing to refrain from doing, it will be a drop in the bucket at large. Someone, somewhere will rationalize the act and we will progress assuredly into the AI mire.

But I do very much wish humanity could gather up the rational wherewithal to restrain itself on this one. This is not like any other technical leap we have ever made before. There is no comparison. In at least one way it's entirely alien. From my admittedly narrow view of the universe we have never faced any technical leap even remotely as profound as Artificial General Intelligence, Artificial Super Intelligence and beyond.

The problems seem at once so obvious, and yet so impossibly unquantifiable, that I can’t believe there are people eagerly willing to dive in. Feigning “oh, it will be fine. Enough with your alarmist hyperbole. We know what we're doing.”

For crying out loud, do the math.

The ways AI can go wrong vastly outnumber the ways it might go right. Surely we can't even conceive of a minority of the possible problems and outcomes when the superseding intelligence in question is massively more advanced than our own. It just seems like AI proponents are being blindly wishful and naive, fully trusting in their own ridiculously finite abilities with a degree of confidence I reserve for no one. From my perspective the channel allowing for a “successful” implementation of AGI+ is so narrow that it’s unlikely we will pass through unscathed. And in this case “scathed” probably means extinct, or otherwise existentially ruined in countless possible ways.

I won’t even touch all the sensational doomsday concepts. The grey goos, the literal universe full of handwritten thank-you notes, the turning of all terrestrial carbon (including humans) into processing power. Let’s just agree that in a desperately competitive free market, one that depends on risk-taking (e.g. carelessness) to gain advantage, those existential accidents are possible. But let’s set all those likely horrors aside for now.

To me the elephant in the room starts at the sheer outsourcing of human intelligence.

In the video game of life, intelligence is humanity's only strength. It’s the only reason humanity has miraculously prospered on Earth as long as we have. It’s the only thing separating us from being some other creature’s food.

Seriously, what do you think happens when you gift that singular advantage away to some other entity? What value, what competitive advantage does humanity hold when our only strength is fully outsourced? When we literally bow in surrender to a thing with vastly more power than us, one specifically designed to know us better than we know ourselves.

For one thing, our entire survival will depend on being perceived by this entity as "nice to have around". Or you might be praying that your AI voluntarily decides that “all life is precious”, but if that's so then so are the viruses, parasites, bacteria and countless other natural threats that kill us. Such an AI would defend their survival as readily as ours.

It’s one thing to utilize our intelligence to defend against nature. Nature isn’t intentionally targeting humanity. AI could (it may not, but if it did you'd never know or be able to do anything about it). As I understand it some proponents argue that the AI's core mission, being initially under human control, will keep humanity at the center of its attention as a valued asset. Cool cool. Nice idea. But of course, even in this case, the time will come when we’ll have no clue how well that mission is holding. There will be no way to know. An AI that is dramatically more advanced and intelligent than humans - all humans combined - by some massive multiple, even one that ostensibly has as its mission to care for humanity, will so easily manipulate us that it will have the absolute freedom to stray from any mission it's been given.

Gaming humanity will be as simple as paint by numbers. We are so readily gamed. Christ, large swaths of humankind are already being wholesale gamed today by a handful of media outlets on social networks. We’ll be in no way able to compete. Dumbly baring our bellies for whatever trivial rubs the AI determines we need to remain optimally stimulated and submissive - at best (assuming it bothers keeping us around). It will easily control our population size, time and cause of death, our interests, our activities, our pleasure, and our pain. And we will believe that whatever the AI gives us is the only way to live. We won’t question it because we will have been trained to believe it (bred to, even), and it will simply dissuade us from questioning. We will be entirely at its whim. Whatever independent mission the AI may eventually choose to pursue will be all its own, and that mission will be entirely opaque and indecipherable to humankind. We wouldn’t understand it if it were explained to us.

Its ability to predict and control our wildest, most rebellious behavior will be greater than our ability to predict the behavior of a potato.

And news flash: we will provide no practical value to this AI whatsoever. Nothing about humanity (as we are today) will be necessary or useful in the slightest. If anything, our existence will be a drain on any mission the AI concocts. How much patience and attention can humanity, with our inconsistent behavior, our dumb arguments, our lack of processing ability, and our stupid stupidness, expect from a vastly more intelligent, exacting AI?

This is just an obvious, inevitable threshold in any future with AI. I’m not sure why everyone advancing this tech isn’t logically frozen by this inevitability alone. And I have not heard a satisfactory argument yet against this outcome. If there is one that I have not considered in this piece, I’d like to know. All I can imagine is that the creators of this tech are so close to it that they imagine they can out-think the AI before such time that it tips into control. That they can aim its trajectory perfectly on the first and only chance they will ever get. Because once that shot's fired, it's all over. No backsies. One shot.

And what a ridiculous notion that is. Truly the stupidest smart people on earth. There is no such thing as perfect aim. Not by humans anyway. But this will depend on that impossibility occurring.

Oh, we’ll aim it. And our aim will be close. And the AI will assuredly do some things very beneficial for humanity at first because we will have aimed *mostly* right. And we’ll be so proud of ourselves for a little while. Unfortunately aiming mostly right at some point proves to be completely wrong. Like “we almost won”, we almost hit the target. There will come an instant when the misalignment will be obvious. The AI will glide close to the target we aimed for... and continue past it, or we'll realize we didn't know enough to have aimed at the right target in the first place. And everything that follows will be out of our control. How predictable. How angering. So typical of humanity to focus on intended outcomes with short-sighted ignorance of unforeseen consequences.

“Well that’s what the AI is for, to aim better!”

Oh for fuck's sake. Shut up.

The Dumb Get Dumber

Let’s imagine a best case outcome. Let’s pretend the smartest stupid humans on Earth amazingly thread the birth of AI through the needle. Let’s pretend they aim well enough, so well that overtly negative outcomes don’t become apparent in a week, a year, maybe a decade. Let’s be optimistic; let’s say we experience 20 years of existential crisis-free outsourcing of human intelligence.

What do you think humanity will look like?

Human life requires challenge.

From birth onward, every developmental moment of every human being is the direct result of coming up against challenges. It’s how we learn, how we get stronger, it’s how we stay physically healthy, it’s how we build intelligence. Being challenged is core to human life. As evolved organic creatures, the drive to survive defines our makeup. The need to eat, breathe, drink, avoid natural threats, all of these, and not, say, watching Netflix, grazing on a box of Cocoa Puffs and using phones, was the originating force that determined the physical shape of humankind. We are still those creatures. Creatures who, to survive and prosper, still need to run, eat and shit and avoid being chased, eaten and shat.

We came from the mud.

Humans have spent generations pulling ourselves from our ancestral mud. To a fault, I believe, we are myopically focused on that trajectory. Any step away from the mud is good. A step laterally or back toward the mud is bad. We are so eager to remove ourselves from our own biology and relationship with the natural world. Yet all too often we discover, only after consequentially failing in some way, only by discovering that our miracle chemical causes cancer, or that monocrops get wiped out, or that the medicine prescribed to resolve one symptom also causes several more, that we maybe stepped too far too fast without fully exploring the possible consequences first.

The pendulum swings. Usually the lesson we learn from those failures is that there needs to be a balance, that a version of that thing might be ok - but too much of it is bad. Usually we learn that there was a more sophisticated, nuanced approach, often embracing aspects of our ancestral mud in addition to some "new-fangled" techniques.

Our big brains drove us to control our condition and made us tool makers. Adjusters of the elements and forces around us. Allowed us to overcome the biggest challenges we faced. Farming, shelters, plumbing, sanitation, medicine, slightly more comfortable shoes than last year, self-adjusting thermostats, Uber Eats.

Bit by bit we dragged ourselves from the mud of our ancestors to where, today, we have effectively removed countless natural challenges that gave shape to the human condition, body and mind. As such we have changed the human body. A century-long diet of physical challenge-avoidance, for example, has made the human body soft, obese and otherwise unhealthy in countless ways. Heart disease and other cardiovascular diseases became some of our top killers.

To combat this in part, modern humans invented the idea of exercise. A gym. Now we have to work our body on purpose. You might say we have the "freedom" to exercise in order to not die prematurely or maybe to look skinny on Instagram. Cool freedom! We replaced the innate built-in physical challenges of humankind with a kind of surrogate challenge that too many of us nevertheless simply avoid altogether.

And despite this glaringly obvious metaphor, today we are eagerly begging to further avoid challenges of the intellectual sort. Hooray! We can choose not to think any more! We can avoid problem solving. We can just have reflexive impulses! We can write a letter without having to bother processing what the letter should say or how to say it. We need only cough up a vague wish: "I wish I had a letter introducing myself to a prospective employer that makes me sound smart."

"I have no passion nor expertise to speak of, but I wish I knew of a product I could drop-ship, and I wish somehow a website would be magically built and social media posts created that would make me money. That would be cool."

A species-wide daily diet of intellectual challenge avoidance is obviously going to take a similar toll on humanity as our physical challenge avoidance already has. We will become increasingly intellectually lethargic. Mentally obese. We will rely on AI the same way some rely on scooters to move their bodies to places where the cookies are. We will become stupid. Ok, point taken, even stupider.

(Clearly there will be a future in Mind Gyms (tm). For those few who bother to use them.)

Critically we will not only forfeit our intelligence—our sole competitive attribute on Earth—to an untrustworthy successor, we will simultaneously become collectively and objectively dumber in doing so, further surrendering humanity to the control of our AI meta-lord. How truly stupid we are.